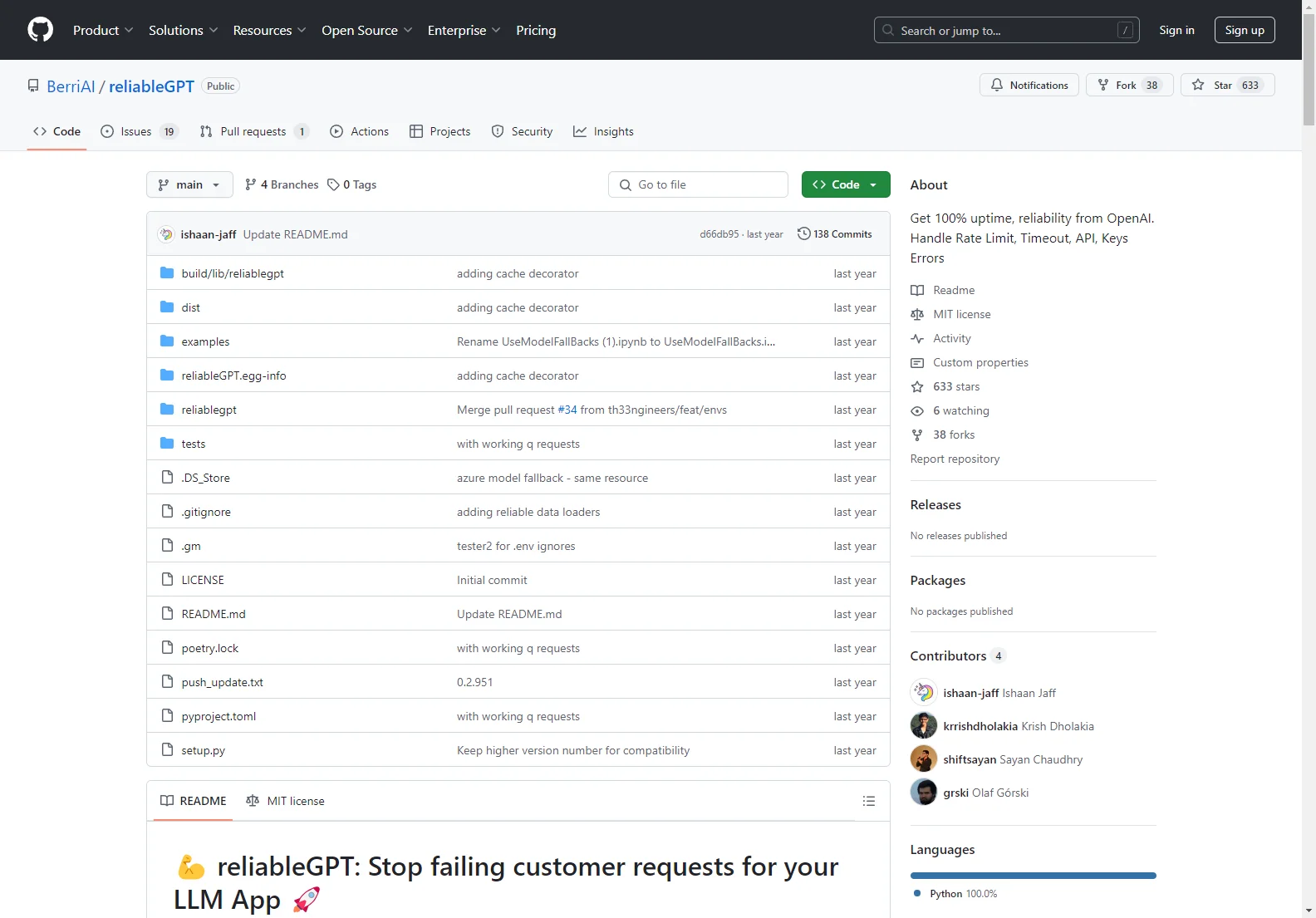

reliableGPT: Ensuring 100% Uptime for Your LLM Application

This document gives an overview of reliableGPT, a tool designed to maximize the reliability and uptime of Large Language Model (LLM) applications. It handles common failure modes such as rate limits, timeouts, invalid API keys, and context window limits, so that fewer errors reach your users.

Key Features and Benefits

- Zero Downtime: reliableGPT aims to keep requests from failing by combining robust error handling with fallback mechanisms.

- Multi-Model Support: Works with multiple models, including GPT-4 and GPT-3.5, and automatically switches to an available alternative when a model fails.

- Context Window Management: Handles context window errors by retrying the request with a model that offers a larger context window.

- API Key Rotation: Supports multiple API keys, automatically rotating to a working key if one becomes invalid.

- Caching: Serves cached responses during high-traffic periods or when all other fallbacks fail, so requests still receive an answer.

- Comprehensive Error Handling: Addresses various error types, including rate limits, timeouts, and invalid API keys.

- Azure OpenAI Integration: Supports Azure OpenAI and can fall back to OpenAI when Azure requests fail.

- User-Friendly Interface: Integrates into existing LLM applications with minimal code changes.

- Monitoring and Alerts: Provides email alerts to keep you informed about potential issues and error spikes.
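Spike alerting of this kind can be sketched as a sliding-window counter that fires once when failures cluster. This is an illustrative assumption, not reliableGPT's alerting logic: `ErrorSpikeMonitor`, its threshold semantics, and the `alert` callback (which would send the email in practice) are all hypothetical.

```python
import time
from collections import deque
from typing import Callable, Deque


class ErrorSpikeMonitor:
    """Hypothetical helper: alert when failures within a time window reach a threshold."""

    def __init__(self, window_seconds: float, threshold: int,
                 alert: Callable[[int], None],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.window_seconds = window_seconds
        self.threshold = threshold
        self.alert = alert  # in practice this might send an email; stubbed here
        self.clock = clock
        self._failures: Deque[float] = deque()
        self._alerted = False

    def record_failure(self) -> None:
        now = self.clock()
        self._failures.append(now)
        # Drop failures that have aged out of the sliding window.
        while self._failures and now - self._failures[0] > self.window_seconds:
            self._failures.popleft()
        count = len(self._failures)
        if count >= self.threshold and not self._alerted:
            self._alerted = True  # alert once per spike, not on every failure
            self.alert(count)
        elif count < self.threshold:
            self._alerted = False  # spike is over; allow the next one to alert


# Usage with a fake clock so the example is deterministic.
alerts = []
now = [0.0]
monitor = ErrorSpikeMonitor(window_seconds=60, threshold=3,
                            alert=alerts.append, clock=lambda: now[0])
for t in (0, 10, 20):
    now[0] = t
    monitor.record_failure()
print(alerts)  # [3]
```

Alerting once per spike, rather than on every failure, keeps a sustained outage from flooding the inbox.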

How reliableGPT Works

reliableGPT acts as a wrapper around your existing LLM API calls. When a request fails, it automatically attempts the following:

- Retry with Alternate Models: It tries each model in a predefined fallback strategy, in order, until one returns a successful response.

- Larger Context Window Models: For context window errors, it switches to models with larger context windows.

- API Key Rotation: If an API key becomes invalid, it automatically tries other available keys.

- Caching: If every other strategy fails, it returns the cached response for the most semantically similar earlier request, minimizing disruption to the user experience.
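The sequence of strategies above can be sketched as a single loop. This is a minimal illustration, not reliableGPT's implementation: the function name, the `(model, api_key, prompt)` call shape, the three exception classes, and the model names are all hypothetical. It also swaps in `difflib` string similarity as a simple stand-in for the embedding-based semantic similarity a production cache would use.

```python
import difflib
from typing import Callable, Dict, List, Sequence, Set


# Hypothetical error types; a real SDK raises its own exception classes.
class RateLimitError(Exception):
    pass


class ContextWindowError(Exception):
    pass


class InvalidKeyError(Exception):
    pass


# Hypothetical shape of the underlying LLM call: (model, api_key, prompt) -> text.
LLMCall = Callable[[str, str, str], str]


def reliable_completion(call: LLMCall, prompt: str, models: Sequence[str],
                        large_context_models: Sequence[str], api_keys: Sequence[str],
                        cache: Dict[str, str], similarity_cutoff: float = 0.8) -> str:
    keys: List[str] = list(api_keys)
    queue: List[str] = list(models)
    tried: Set[str] = set()
    while queue:
        model = queue.pop(0)
        if model in tried:
            continue
        tried.add(model)
        for key in list(keys):  # iterate over a copy so bad keys can be removed
            try:
                answer = call(model, key, prompt)
                cache[prompt] = answer  # remember successes for the cache fallback
                return answer
            except InvalidKeyError:
                keys.remove(key)  # rotate: drop the bad key and try the next one
            except ContextWindowError:
                # Prompt too long: try the larger-context models before the rest.
                queue = list(large_context_models) + queue
                break
            except (RateLimitError, TimeoutError):
                break  # move on to the next model in the fallback order
    # Every live attempt failed: answer from the most similar cached prompt.
    match = difflib.get_close_matches(prompt, list(cache), n=1, cutoff=similarity_cutoff)
    if match:
        return cache[match[0]]
    raise RuntimeError("all models, API keys, and cache lookups failed")
```

For example, with `models=["gpt-4", "gpt-3.5-turbo"]`, a rate limit on `gpt-4` moves the request to `gpt-3.5-turbo`, and a context window error there moves it to whatever is listed in `large_context_models`. The cache here is an unbounded dict; a real one would need eviction and, for true semantic matching, an embedding index.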

Integration and Usage

Integrating reliableGPT into your application is straightforward. In the basic case, it takes a single line of code that replaces your existing LLM API call with the reliableGPT wrapper. The project's documentation has detailed instructions and examples.
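The single-line integration pattern amounts to rebinding an existing function to a wrapped version of itself. The sketch shows only that pattern: `with_fallback`, `create_completion`, and the `model` keyword convention are hypothetical stand-ins, not reliableGPT's API, whose actual wrapper and parameters are described in the project's documentation.

```python
import functools
from typing import Any, Callable, Optional, Sequence


def with_fallback(fn: Callable[..., Any], fallback_models: Sequence[str]) -> Callable[..., Any]:
    """Hypothetical wrapper: if `fn` raises, retry it with each fallback model in order."""
    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        first = kwargs.get("model")
        models = [first] + [m for m in fallback_models if m != first]
        last_error: Optional[Exception] = None
        for model in models:
            try:
                return fn(*args, **{**kwargs, "model": model})
            except Exception as err:  # a real wrapper would catch only retryable errors
                last_error = err
        assert last_error is not None
        raise last_error  # every model failed: surface the last error
    return wrapper


def create_completion(model: str, prompt: str) -> str:
    """Stand-in for an existing LLM API call."""
    if model == "gpt-4":
        raise TimeoutError("gpt-4 timed out")
    return f"{model}: ok"


# The one-line integration: rebind the existing call to its wrapped version.
create_completion = with_fallback(create_completion, ["gpt-3.5-turbo"])
print(create_completion(model="gpt-4", prompt="hi"))  # gpt-3.5-turbo: ok
```

Because the wrapper closes over the original function object, rebinding the name does not cause recursion, and existing call sites pick up the fallback behavior without further changes.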

Advanced Features

- Custom Fallback Strategies: Define your preferred order of models for retries.

- Backup API Keys: Provide multiple OpenAI or Azure API keys for redundancy.

- Caching Configuration: Customize caching behavior to suit your application's needs.

- Thread Management: Control the number of concurrent threads to handle requests efficiently.
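The advanced options map naturally onto a configuration object plus a bounded worker pool. A minimal sketch, assuming hypothetical field names (`fallback_strategy`, `backup_api_keys`, `caching`, `max_threads`) and defaults that are not taken from reliableGPT's actual parameters; only `max_threads` is exercised here, where it caps how many requests run at once.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Callable, List, Sequence


@dataclass
class ReliabilityConfig:
    """Hypothetical settings mirroring the advanced options listed above."""
    fallback_strategy: List[str] = field(default_factory=lambda: ["gpt-4", "gpt-3.5-turbo"])
    backup_api_keys: List[str] = field(default_factory=list)
    caching: bool = True
    max_threads: int = 4


def run_batch(prompts: Sequence[str], handle: Callable[[str], str],
              config: ReliabilityConfig) -> List[str]:
    # At most config.max_threads calls to `handle` run concurrently;
    # pool.map returns results in the same order as the input prompts.
    with ThreadPoolExecutor(max_workers=config.max_threads) as pool:
        return list(pool.map(handle, prompts))


cfg = ReliabilityConfig(max_threads=2)
print(run_batch(["a", "b", "c"], str.upper, cfg))  # ['A', 'B', 'C']
```

Capping the pool size bounds how many requests hit the provider at once, which also limits how quickly a burst of traffic can trip rate limits.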

Comparisons with Other Solutions

Other libraries offer some error handling, often limited to simple retries. reliableGPT combines several strategies to maximize uptime and minimize service disruptions: beyond retrying, it selects fallback models, manages API keys, and serves cached responses.

Conclusion

reliableGPT is aimed at developers building LLM applications that require high availability and reliability. Its simple integration, broad error handling, and configurable fallbacks help keep failures from reaching users.