The Local AI Playground: Your Offline AI Sandbox

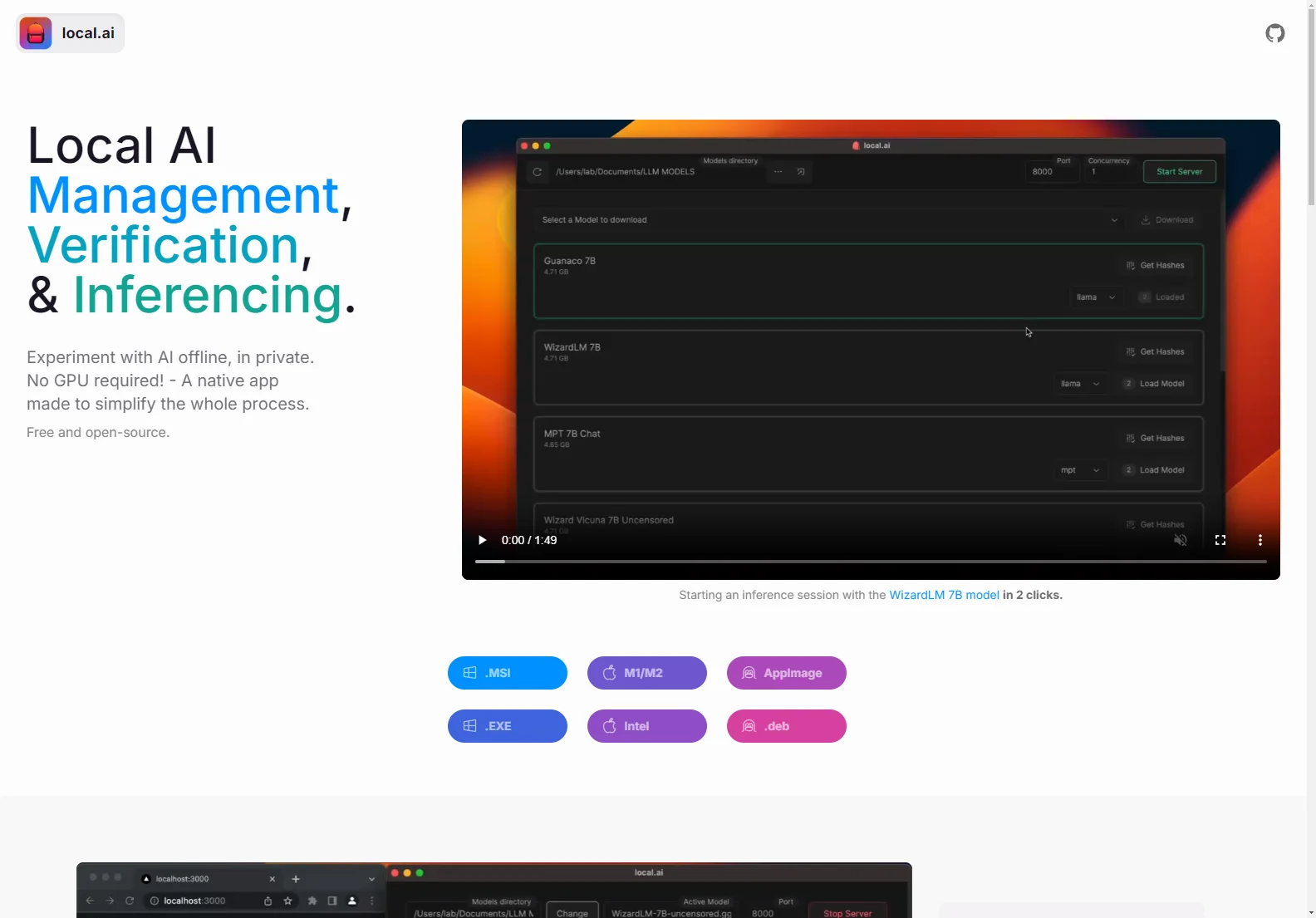

Local.ai is a free, open-source native application for managing, verifying, and running AI models offline. Inference runs on the CPU, so no GPU is required. This lets you experiment with AI privately, without relying on cloud services.

Key Features

Model Management:

- Centralized Location: Keep track of all your AI models in one place. Choose any directory to store your models.

- Resumable Downloads: Download models efficiently, with the ability to resume interrupted downloads.

- Usage-Based Sorting: Organize your models based on usage frequency.

- Directory Agnostic: Does not depend on a fixed storage location, so models can live in whichever directory you choose.

- Upcoming Features: Nested directory support, custom sorting and searching.
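How Local.ai implements resumable downloads is not described here. One common approach is to keep a partial file on disk and ask the server for the remaining bytes with an HTTP `Range` header. The sketch below illustrates that idea with the Python standard library only; the function names and the `.part` suffix are hypothetical and are not taken from Local.ai.

```python
import os
import urllib.request


def resume_offset(part_path):
    """Return how many bytes of the partial file are already on disk."""
    return os.path.getsize(part_path) if os.path.exists(part_path) else 0


def range_header(offset):
    """Build the request header that asks for bytes from `offset` onward."""
    return {"Range": f"bytes={offset}-"} if offset > 0 else {}


def download_resumable(url, dest, chunk_size=1 << 20):
    """Download `url` to `dest`, continuing from `dest + '.part'` if present.

    Assumes the server supports range requests. A server that answers
    HTTP 416 (for example, when the partial file is already complete)
    raises urllib.error.HTTPError, which is not handled in this sketch.
    """
    part = dest + ".part"  # hypothetical naming convention
    offset = resume_offset(part)
    request = urllib.request.Request(url, headers=range_header(offset))
    with urllib.request.urlopen(request) as response:
        # 206 means the server honored the range; otherwise start over.
        mode = "ab" if response.status == 206 else "wb"
        with open(part, mode) as out:
            while chunk := response.read(chunk_size):
                out.write(chunk)
    os.replace(part, dest)  # rename only once the download has finished
    return dest
```

Writing to a separate partial file and renaming it at the end means an interrupted download never leaves a truncated file under the final model name.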

Digest Verification:

- Integrity Assurance: Verify that a downloaded model is intact by computing its BLAKE3 and SHA-256 digests.

- Known-Good Model API: Access a library of verified models.

- Model Info Cards: Quickly access essential information about each model.

- Upcoming Features: Model explorer, model search, and model recommendations.
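Digest verification of this kind can be reproduced by hand. The following is a minimal sketch, not Local.ai's own code: it streams a file through SHA-256 using Python's standard `hashlib` and compares the result with an expected hex digest. BLAKE3 is not part of the Python standard library, so it is left out here; the function names are illustrative.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file without loading it whole."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        # Read fixed-size chunks so multi-gigabyte models fit in memory.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_digest(path, expected_hex):
    """Return True if the file's SHA-256 digest equals `expected_hex`."""
    actual = sha256_of_file(path)
    # Normalize case and whitespace before comparing the two hex strings.
    return hmac.compare_digest(actual, expected_hex.strip().lower())
```

A typical use is to copy the published digest for a model from its info card and call `matches_digest("model.bin", published_digest)` before loading the file.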

CPU Inferencing:

- Adaptable to Threads: Adapts to the number of CPU threads available for inferencing.

- GGML Quantization: Supports several quantization levels (q4, q5.1, q8, f16), so you can trade model precision for lower memory use and faster inference.

- Upcoming Features: GPU inferencing and parallel session support.
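To get a feel for what the quantization levels mean on a CPU-only machine, the sketch below estimates the size of a model's weights from a nominal bit width per level and picks a thread count from the cores the operating system reports. The bit widths are nominal values assumed for illustration: real GGML files store extra per-block scaling data, so actual files are somewhat larger. The function names are hypothetical.

```python
import os

# Nominal bits per weight for each level named above (an assumption;
# per-block scale factors in real GGML files add some overhead).
NOMINAL_BITS = {"q4": 4, "q5.1": 5, "q8": 8, "f16": 16}


def estimate_weight_bytes(n_params, quant):
    """Rough lower bound on the bytes needed to hold the weights."""
    return n_params * NOMINAL_BITS[quant] // 8


def pick_threads(reserve=1):
    """Use all reported CPU threads except `reserve`, but at least one."""
    total = os.cpu_count() or 1  # cpu_count() may return None
    return max(1, total - reserve)
```

Under these assumptions a 7-billion-parameter model needs roughly 3.5 GB of weights at q4 and 14 GB at f16, which is why the lower levels matter on machines with limited RAM.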

Inferencing Server:

- Easy Setup: Start a local streaming server for AI inferencing in just two clicks.

- User-Friendly Interface: A simple and intuitive interface for quick inference.

- Supports Various Output Formats: Writes inference results to .mdx files and supports audio and image processing.

- Inference Parameters: Fine-tune your inference parameters for optimal results.

- Remote Vocabulary: Access and utilize remote vocabularies.

- Upcoming Features: Server management.
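A client for a local streaming server could look roughly like the sketch below. The wire format is an assumption, not something this article documents: the code assumes the server streams server-sent events, with each result on a `data:` line and a `[DONE]` marker at the end, and that it accepts a JSON body with `prompt` and `stream` fields. The URL and field names are placeholders; check them against the address and parameters the application shows when you start the server.

```python
import json
import urllib.request


def iter_sse_data(lines):
    """Yield the payload of each `data:` line in an event stream."""
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.rstrip("\r\n")
        if not line.startswith("data:"):
            continue  # skip blank separators, comments, and other fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":  # assumed end-of-stream marker
            break
        yield payload


def stream_completion(url, prompt, **params):
    """POST a prompt to a (hypothetical) endpoint and yield streamed chunks.

    Extra keyword arguments are forwarded as inference parameters; their
    names depend on the server and are not defined here.
    """
    body = json.dumps({"prompt": prompt, "stream": True, **params}).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        # Iterating an HTTP response yields it line by line as bytes.
        yield from iter_sse_data(response)
```

Keeping the line parser separate from the network call makes it possible to test the parsing against recorded output without a running server.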

Comparison to Other AI Tools

Unlike cloud-based AI solutions, Local.ai prioritizes privacy and offline use. Once a model has been downloaded, inference runs entirely on your machine, with no internet connection and no third-party service involved. This makes it a good fit for users who want to experiment with AI without sending their data elsewhere. Compared to other offline AI tools, Local.ai stands out for its user-friendly interface, its model management features, and its support for several quantization levels.

Getting Started

Local.ai is available for various operating systems, including Windows, macOS, and Linux. Download the appropriate installer for your system and follow the simple installation instructions. The application is designed to be intuitive and easy to use, even for beginners.

Conclusion

Local.ai provides an accessible way to explore AI offline. Its focus on privacy, ease of use, and model management makes it useful for both beginners and experienced AI users. Planned features such as GPU inferencing, parallel sessions, and server management should make it more capable over time.