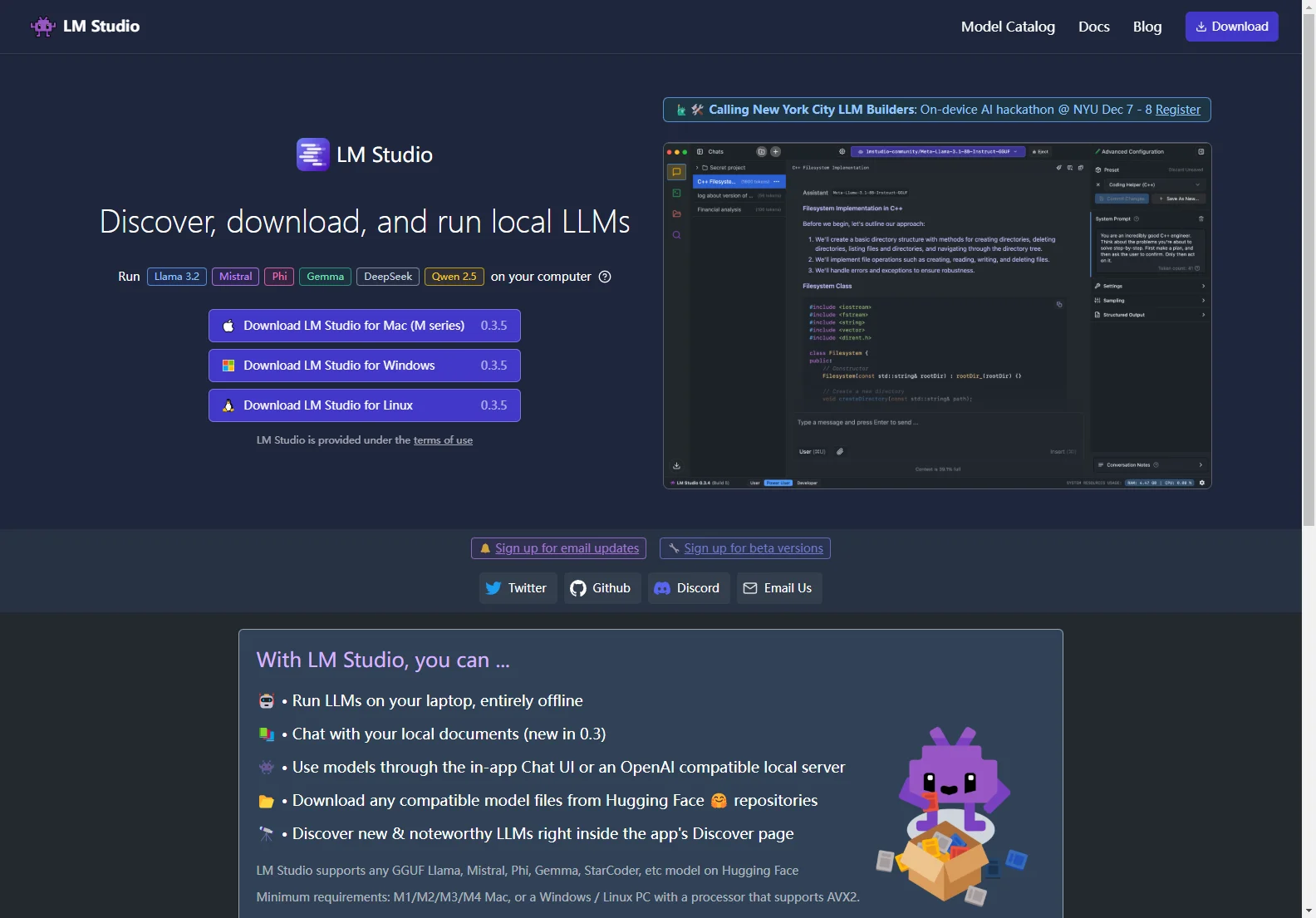

LM Studio: Run Local LLMs on Your Computer

LM Studio is a desktop application that lets you run large language models (LLMs) directly on your own computer. Once a model has been downloaded, it runs completely offline. Running locally keeps your prompts and data on your machine, removes the dependence on an internet connection, and avoids network round trips to a remote service. This article covers LM Studio's key features, supported models, system requirements, privacy, and licensing.

Key Features

- Offline LLM Execution: The core functionality of LM Studio is its ability to run LLMs locally, eliminating concerns about data privacy and internet dependence.

- Model Support: LM Studio is compatible with a wide range of LLMs, including Llama 3.2, Mistral, Phi, Gemma, DeepSeek, and Qwen 2.5, all available through Hugging Face. Support for additional models continues to expand.

- Document Chat: A standout feature is the ability to chat with your local documents. This allows for efficient information retrieval and summarization from your own files.

- User-Friendly Interface: LM Studio provides an intuitive interface, making it accessible to both experienced users and newcomers to LLMs. The app includes a chat UI and an OpenAI-compatible local server, so tools written against the OpenAI API can be pointed at a model running on your own machine.

- Model Discovery: The app's integrated Discover page helps you find and download new and noteworthy LLMs directly within the application.
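
To make the OpenAI-compatible local server mentioned above more concrete, here is a minimal sketch of a chat request built with Python's standard library. The address `http://localhost:1234/v1`, the model name `local-model`, and the helper names `build_chat_request` and `send_chat` are assumptions for illustration. Check the server settings in your own LM Studio installation for the actual address and the identifier of the loaded model.

```python
import json
import urllib.request

# Assumption: the local server listens on this address; adjust to your setup.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", base_url=BASE_URL):
    """Build an OpenAI-style chat completion request (nothing is sent yet)."""
    payload = {
        "model": model,  # placeholder; use the identifier of the model you loaded
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat(request, timeout=60):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-style responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running and a model loaded, `send_chat(build_chat_request("Summarize GGUF in one sentence."))` would return the model's reply as a string. Keeping request construction separate from sending makes the payload easy to inspect without a running server.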

Supported Models

LM Studio supports models in the GGUF format. This includes, but isn't limited to:

- Llama

- Mistral

- Phi

- Gemma

- StarCoder

Many more models are added regularly. Check the app's Discover page for the latest additions.
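
If you download model files by hand, a quick sanity check can confirm that a file really is GGUF before you try to load it. The sketch below relies on the fact that a GGUF file starts with the four ASCII bytes `GGUF` followed by a 32-bit version number. It assumes the common little-endian layout, and the function name `read_gguf_version` is made up for this example, not part of LM Studio.

```python
import struct

GGUF_MAGIC = b"GGUF"  # first four bytes of every GGUF file


def read_gguf_version(path):
    """Return the GGUF version number, or None if the file is not GGUF."""
    with open(path, "rb") as f:
        header = f.read(8)
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # Assumption: little-endian file, which is the usual case.
    (version,) = struct.unpack("<I", header[4:8])
    return version
```

A return value of `None` means the file is truncated or in another format and would need to be converted or downloaded again.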

System Requirements

LM Studio requires an Apple Silicon Mac (M1/M2/M3/M4), or a Windows/Linux PC whose processor supports AVX2 instructions.
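
On Linux, one way to check the AVX2 requirement is to look for the `avx2` flag in `/proc/cpuinfo`. The following is a rough, Linux-only sketch. The function name `cpuinfo_has_avx2` is hypothetical, and macOS and Windows need a different approach.

```python
def cpuinfo_has_avx2(cpuinfo_text):
    """Return True if any 'flags' line in /proc/cpuinfo text lists avx2."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        # Only inspect the CPU feature list, not fields such as 'model name'.
        if key.strip() == "flags" and "avx2" in value.split():
            return True
    return False


def this_machine_has_avx2(path="/proc/cpuinfo"):
    """Read the local cpuinfo file (Linux only) and check for AVX2."""
    with open(path, encoding="utf-8") as f:
        return cpuinfo_has_avx2(f.read())
```

Parsing the text separately from reading the file means the check can be tried on a pasted copy of another machine's `/proc/cpuinfo` output.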

Privacy

LM Studio prioritizes user privacy. It does not collect any data or monitor your actions. All processing happens locally on your machine, ensuring your data remains confidential.

Business Use

LM Studio is free for personal use. For business applications, please contact the developers to discuss licensing options.

Conclusion

LM Studio is a practical option for anyone who wants to use LLMs without giving up privacy or depending on an internet connection. Its user-friendly interface, broad model support, and local-only processing make it a useful tool for personal use and, with the appropriate license, for professional use.