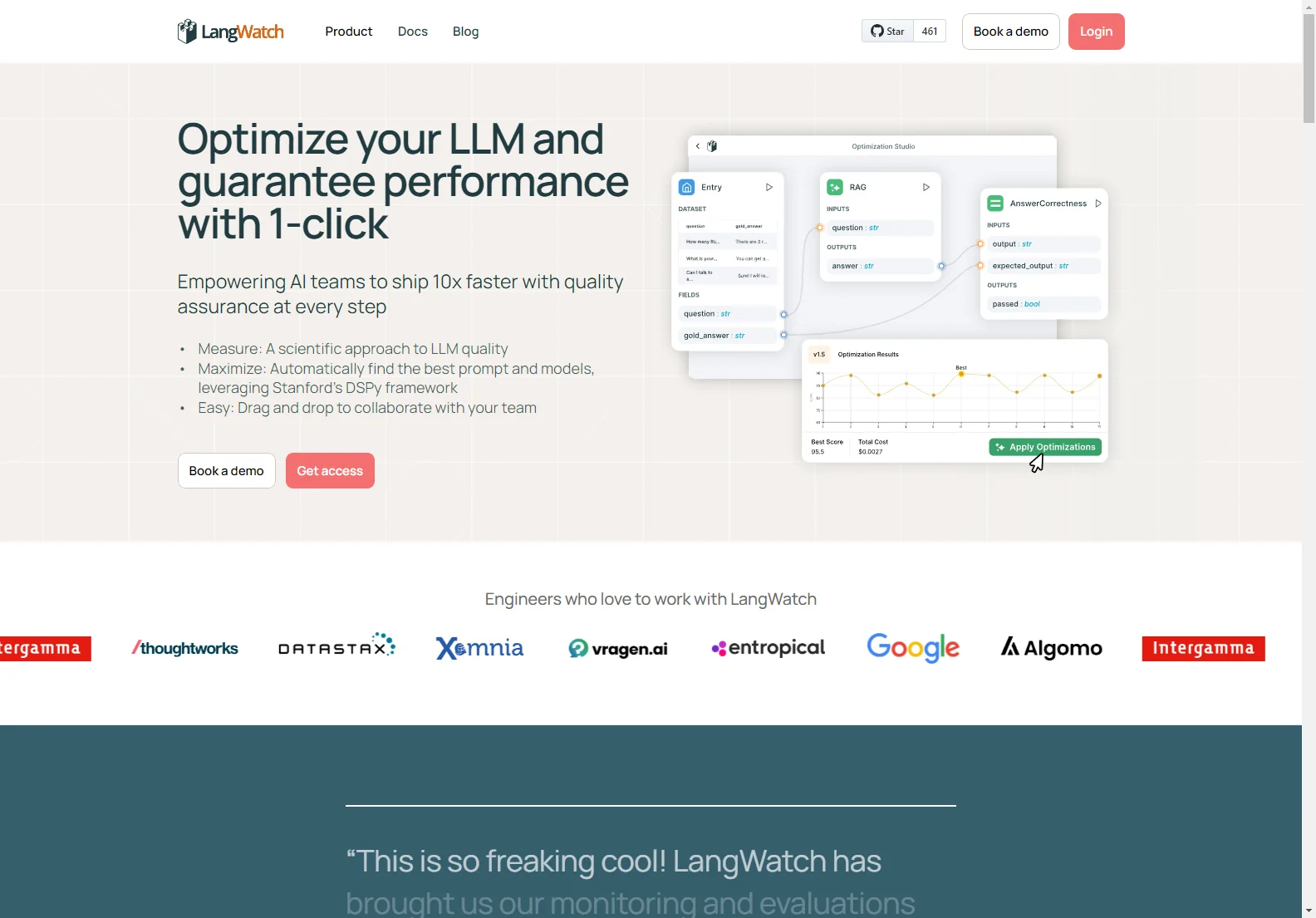

LangWatch - LLM Optimization Studio

LangWatch is a platform designed to help AI teams build and deploy Large Language Model (LLM) applications faster while maintaining high quality and performance at every step. It tackles the challenges of LLM development head-on with a comprehensive suite of tools for measurement, optimization, and collaboration.

Key Features

- Measure: LangWatch provides a scientific approach to LLM quality assessment. It lets you evaluate your entire pipeline, not just individual prompts, in a way similar to unit testing for LLMs, so you can build highly reliable components. This includes measuring performance, latency, and cost, as well as debugging messages and outputs.

- Maximize: Leveraging the power of Stanford's DSPy framework, LangWatch automatically finds the best prompts and models, dramatically reducing the time spent on manual optimization.

- Easy Collaboration: The platform's intuitive drag-and-drop interface makes collaboration easy for all team members, regardless of their technical expertise. This makes it simple to involve domain experts from departments such as Legal, Sales, and Customer Support.

- Comprehensive Monitoring: LangWatch monitors your LLM application's performance in real time, with tools for debugging, cost tracking, annotations, alerts, and detailed datasets.

- Advanced Analytics: Access in-depth analytics dashboards to visualize performance data, identify trends, and make data-driven decisions. This includes topic analysis, event tracking, and custom graph creation.

- Robust Evaluations & Guardrails: LangWatch includes advanced features like jailbreak detection and RAG quality assessment to ensure the safety and reliability of your LLM applications.

- Seamless Integration: The platform integrates easily into any tech stack, supporting a wide range of LLM providers (OpenAI, Claude, Azure, Gemini, Hugging Face, Groq) and tools (LangChain, DSPy, Vercel AI SDK, LiteLLM, OpenTelemetry, LangFlow).

- Enterprise-Grade Security: LangWatch prioritizes security and compliance, offering self-hosted deployment options, role-based access controls, and GDPR compliance, and it is working towards ISO 27001 certification.
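To make the "unit testing for LLMs" idea from the Measure feature concrete, here is a minimal, framework-agnostic Python sketch. It does not use the LangWatch SDK or any LangWatch API: `evaluate_pipeline`, `toy_pipeline`, the exact-match metric, and the flat per-call cost are all hypothetical stand-ins chosen for illustration. The idea it shows is that the whole pipeline, not a single prompt, is run over a small dataset and scored on quality, latency, and cost, with an assertion acting as a regression gate.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Example:
    question: str
    expected: str


@dataclass
class EvalReport:
    accuracy: float
    mean_latency_s: float
    total_cost_usd: float


def evaluate_pipeline(
    pipeline: Callable[[str], Tuple[str, float]], dataset: List[Example]
) -> EvalReport:
    """Run every example through the whole pipeline and aggregate
    quality (exact match), wall-clock latency, and reported cost."""
    correct = 0
    latencies: List[float] = []
    total_cost = 0.0
    for ex in dataset:
        start = time.perf_counter()
        answer, cost = pipeline(ex.question)
        latencies.append(time.perf_counter() - start)
        total_cost += cost
        # Hypothetical metric: case-insensitive exact match.
        if answer.strip().lower() == ex.expected.strip().lower():
            correct += 1
    return EvalReport(
        accuracy=correct / len(dataset),
        mean_latency_s=sum(latencies) / len(latencies),
        total_cost_usd=total_cost,
    )


def toy_pipeline(question: str) -> Tuple[str, float]:
    """Hypothetical stand-in for a retrieval + LLM pipeline.
    Returns (answer, cost_in_usd) with an assumed flat cost per call."""
    knowledge_base = {"capital of france?": "Paris", "2 + 2?": "4"}
    return knowledge_base.get(question.lower(), "I don't know"), 0.0001


dataset = [
    Example("Capital of France?", "Paris"),
    Example("2 + 2?", "4"),
    Example("Largest planet?", "Jupiter"),
]
report = evaluate_pipeline(toy_pipeline, dataset)
print(report)

# Unit-test-style gate: fail loudly if pipeline quality drops below a chosen threshold.
assert report.accuracy >= 0.6, "pipeline quality regressed"
```

Exact match is the simplest possible metric; a real setup would likely swap in task-specific evaluators (for example, RAG faithfulness or an LLM-as-judge check) while keeping the same dataset-in, report-out shape.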

Benefits

- 10x Faster Development: Automate the process of finding the optimal prompts and models, significantly accelerating your development cycle.

- Guaranteed Quality: Implement rigorous quality assurance measures at every stage of development, ensuring reliable and high-performing applications.

- Enhanced Collaboration: Streamline collaboration among team members, fostering a more efficient and productive workflow.

- Data-Driven Insights: Gain valuable insights into your LLM application's performance through comprehensive monitoring and analytics.

- Improved Safety and Compliance: Mitigate risks and ensure compliance with industry standards and regulations.

Use Cases

- Optimize RAG: Improve the accuracy and efficiency of your Retrieval-Augmented Generation (RAG) systems.

- Agent Routing: Optimize the routing of customer inquiries to the most appropriate agents.

- Categorization Accuracy: Improve the accuracy of automated categorization tasks.

- Structured Vibe-Checking: Ensure consistent and appropriate tone and style in your LLM outputs.

- Custom Evaluations: Build and deploy your own custom evaluation metrics.

Conclusion

LangWatch is more than just an LLM optimization tool; it's a comprehensive platform that addresses the entire lifecycle of LLM application development. By combining automation, collaboration, and robust monitoring capabilities, LangWatch empowers AI teams to ship high-quality applications significantly faster, gaining a competitive advantage in the rapidly evolving landscape of AI.