RAG: Revolutionizing AI with Retrieval-Augmented Generation

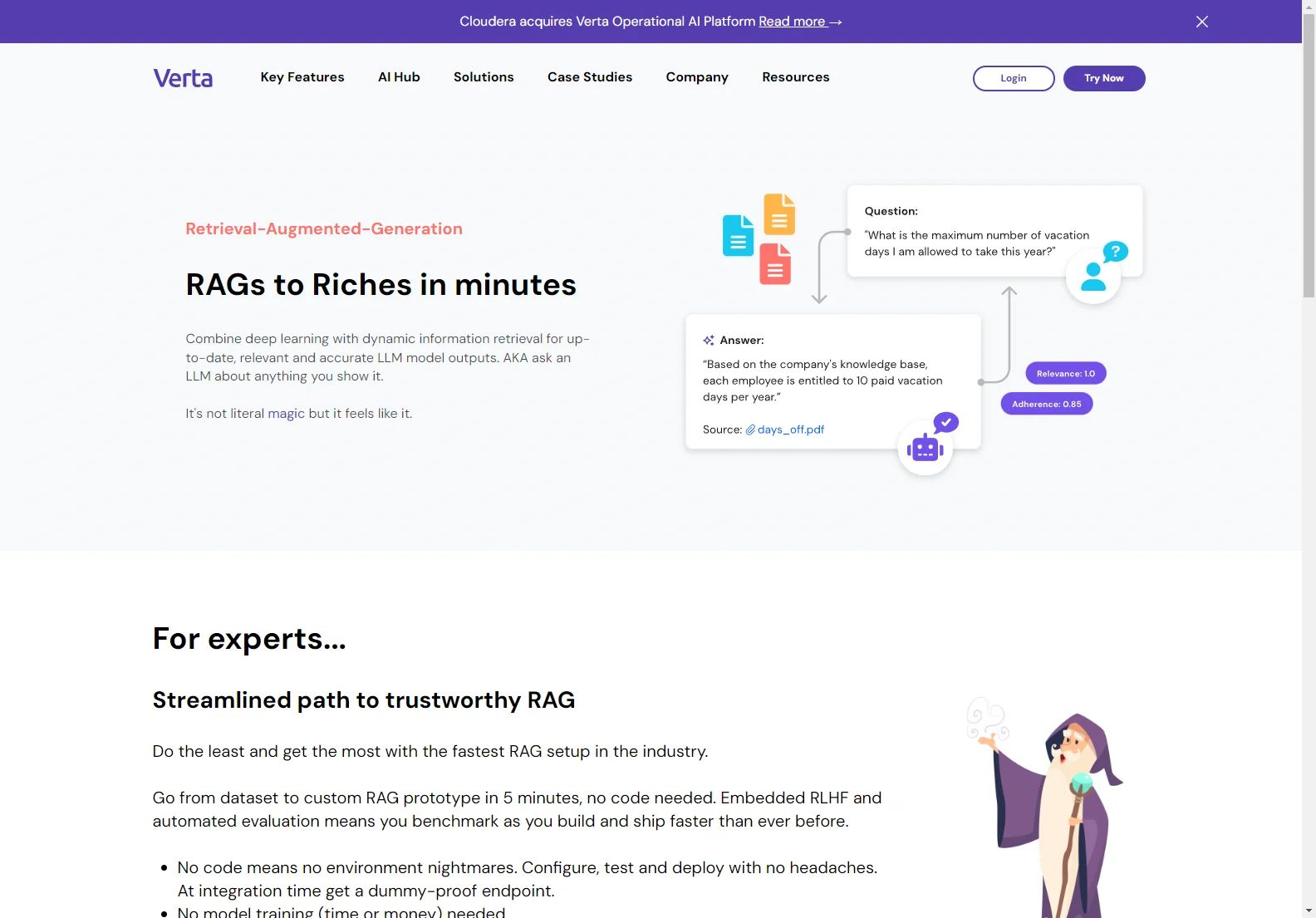

Retrieval-Augmented Generation (RAG) is transforming how we interact with AI. Instead of relying only on what a large language model (LLM) learned during training, RAG first retrieves relevant information from an external knowledge source and then passes it to the LLM as context. This tends to produce more accurate, relevant, and up-to-date AI-generated content. Imagine asking an LLM a question and receiving an answer grounded in the specific data you provide: that is the idea behind RAG.

Key Features of RAG

- Up-to-date Information: Traditional LLMs are limited to the static data they were trained on. RAG retrieves information from a knowledge base at query time, so answers can reflect new content as soon as it has been indexed.

- Enhanced Accuracy: By grounding responses in specific data sources, RAG can substantially reduce, though not eliminate, the hallucinations and inaccuracies often associated with LLMs.

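- Customizable Knowledge Base: You can integrate your own proprietary data, such as PDFs, spreadsheets, and databases, to create a bespoke knowledge base for your AI system. Documents are typically split into smaller chunks and indexed so that the most relevant passages can be retrieved.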

- Streamlined Workflow: Because new knowledge is added by indexing documents rather than by training or fine-tuning a model, RAG makes it possible to build AI-powered solutions without extensive machine learning expertise. This makes rapid prototyping and deployment key advantages.

- Automated Evaluation: Many RAG frameworks and platforms include evaluation tooling for continuous benchmarking of retrieval and answer quality, so you can measure and improve your RAG system over time.

- Easy Maintenance and Scaling: Keeping the knowledge base current means adding, updating, or removing indexed documents, with no model retraining, and the retrieval layer can be scaled to meet growing demands.

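- Hallucination Detection: Because each answer is based on retrieved sources, its claims can be checked against those sources. Some RAG platforms include built-in mechanisms that detect and flag potential hallucinations, making outputs easier to trust.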
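Much of the customization and maintenance story comes from the fact that the knowledge lives in an index outside the model. The sketch below is a minimal illustration under stated assumptions: documents are split into fixed-size word chunks, a toy bag-of-words `embed` function stands in for a real embedding model, and `KnowledgeBase` is a hypothetical in-memory class, not the API of any particular vector database. Updating a document means re-chunking and re-indexing it; the LLM itself is never retrained.

```python
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model (assumption): a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def chunk(text: str, max_words: int = 50) -> list[str]:
    """Split a document into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


class KnowledgeBase:
    """Hypothetical in-memory index: chunk ID -> (chunk text, chunk vector)."""

    def __init__(self) -> None:
        self.chunks: dict[str, tuple[str, Counter]] = {}

    def delete_document(self, doc_id: str) -> None:
        """Remove every chunk that belongs to doc_id."""
        prefix = f"{doc_id}#"
        for key in [k for k in self.chunks if k.startswith(prefix)]:
            del self.chunks[key]

    def upsert_document(self, doc_id: str, text: str, max_words: int = 50) -> int:
        """Add or replace a document: drop its old chunks, then chunk, embed, and store it.

        Returns the number of chunks stored. No model is retrained at any point.
        """
        self.delete_document(doc_id)
        pieces = chunk(text, max_words)
        for i, piece in enumerate(pieces):
            self.chunks[f"{doc_id}#{i}"] = (piece, embed(piece))
        return len(pieces)


kb = KnowledgeBase()
kb.upsert_document("refund-policy", "Refunds are issued within 14 days of purchase.")
# The policy changes: re-index the document instead of retraining anything.
kb.upsert_document("refund-policy", "Refunds are issued within 30 days of purchase.")
print(len(kb.chunks))                   # 1
print(kb.chunks["refund-policy#0"][0])  # Refunds are issued within 30 days of purchase.
```

Real systems usually chunk by tokens or by document structure and often overlap neighboring chunks; the fixed word count here only keeps the sketch short.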

Benefits of Using RAG

- Access to Private Data: Leverage your confidential and proprietary data sources to generate AI-driven insights.

- Improved Relevance: Get answers directly tied to your specific knowledge base, reducing irrelevant or generic responses.

- Timely Results: Your AI system can draw on information that is as current as your knowledge base.

- Simplified Development: Build sophisticated AI solutions without the need for extensive machine learning expertise.

How RAG Works

RAG operates by combining two key components:

- Information Retrieval: This stage converts the user's input into a vector embedding and queries a vector database for the documents (or document chunks) whose embeddings are most similar to it.

- Large Language Model Generation: The retrieved documents are then inserted into the LLM's prompt alongside the user's question, and the LLM uses this context to generate a response grounded in them.
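To make the two stages concrete, here is a minimal, dependency-free sketch. It assumes a toy bag-of-words `embed` function and a plain Python list in place of a real embedding model and vector database, ranks documents by cosine similarity, and stops once the augmented prompt is built; `retrieve`, `build_prompt`, and the prompt wording are illustrative choices, and `call_llm` is a hypothetical placeholder for whatever LLM API you use.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model (assumption): a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Stage 1 (retrieval): rank documents by similarity to the query and keep the top k."""
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda doc: cosine(query_vec, embed(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Stage 2 (generation input): place the retrieved passages in the LLM prompt."""
    sources = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the question using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {query}\nAnswer:"
    )


docs = [
    "Refunds are issued within 30 days of purchase.",
    "The office is open Monday to Friday.",
    "Shipping to Europe takes five business days.",
]
question = "When are refunds issued?"
prompt = build_prompt(question, retrieve(question, docs, k=1))
print(prompt)
# In a real system, the prompt would now be sent to an LLM, e.g. a hypothetical call_llm(prompt).
```

This toy `embed` only matches exact words ("refund" would not match "refunds"). Production systems instead use learned embedding models, which place semantically similar text close together, and rely on the vector database's nearest-neighbor index rather than sorting every document.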

Real-World Applications of RAG

RAG has a wide range of applications across various industries, including:

- Customer Support: Provide accurate and timely answers to customer queries based on your company's knowledge base.

- Internal Knowledge Sharing: Enable employees to quickly access relevant information and expertise within the organization.

- Research and Development: Accelerate research by providing access to relevant publications and data.

- Financial Analysis: Generate insights based on real-time market data and financial reports.

Conclusion

RAG represents a significant advancement in AI technology. By pairing LLMs with dynamic information retrieval, RAG offers an efficient way to generate answers that are more accurate, relevant, and up-to-date. Its ease of use and wide range of applications make it a valuable tool for businesses and researchers alike.