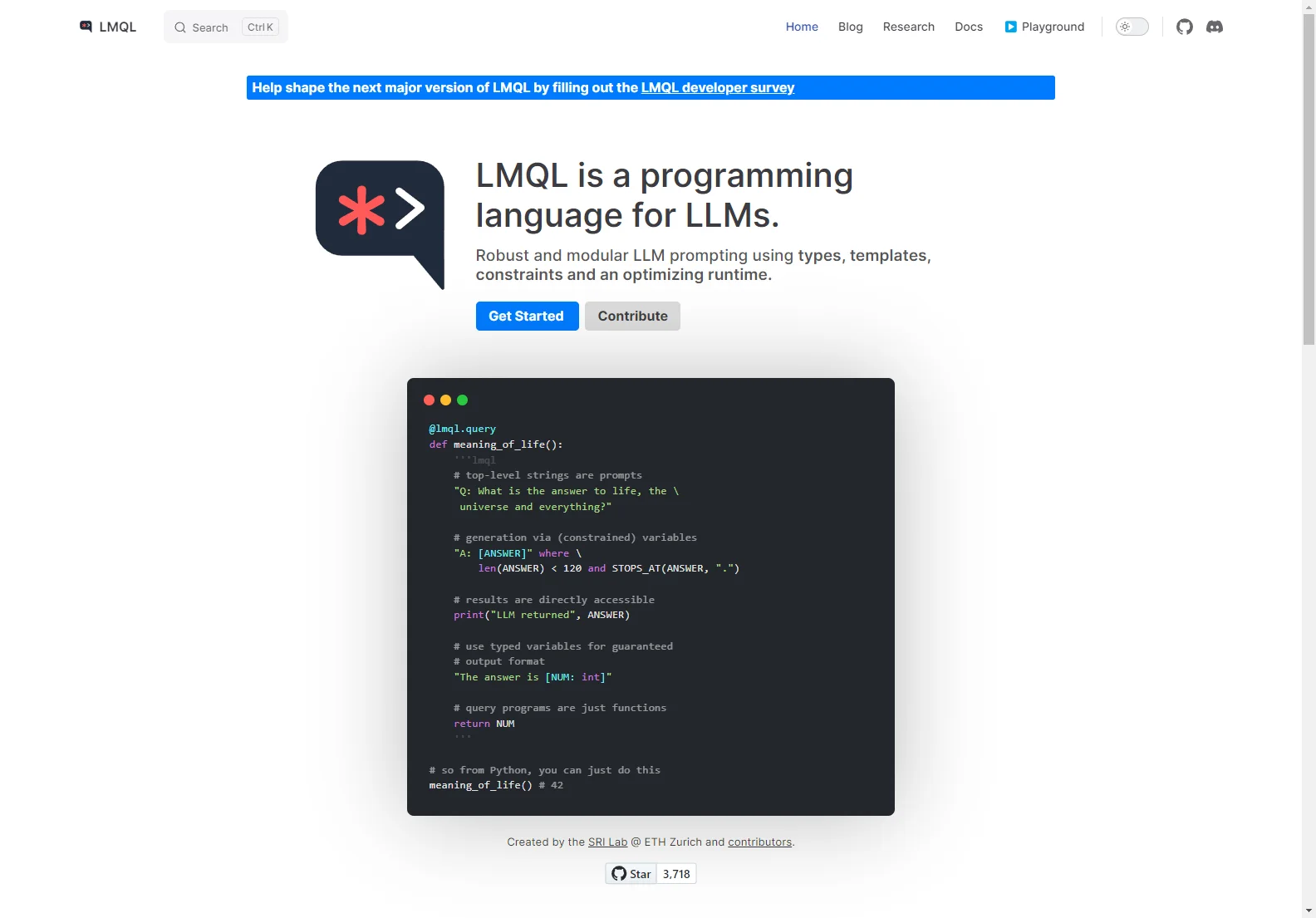

LMQL: A Programming Language for LLMs

LMQL (Language Model Query Language) is a programming language for interacting with large language models (LLMs). Instead of treating a prompt as a single block of text, it lets you combine prompt text with program logic and declarative constraints on what the model may generate. This makes prompting more modular and controllable, and the results more reliable and predictable.

Key Features of LMQL

- Types and Templates: Declare the expected type of a generated value (for example, an integer) so the response can be used directly in code without manual parsing. Templates build prompts from fixed text and placeholders for variables, making them easier to organize and reuse.

- Constraints: Restrict the LLM's output with constraints such as length limits, specific keywords, or format requirements. LMQL applies constraints during generation rather than after it, so the model is steered toward responses that satisfy them.

- Optimizing Runtime: LMQL's runtime optimizes how queries are executed, aiming to reduce the number of model calls and generated tokens, and with them latency and cost.

- Nested Queries: Modularize prompts by calling one query from within another, so prompt components can be written once and reused across complex prompting tasks.

- Multi-Part Prompts: Break a complex task into a sequence of smaller prompts within a single query, where later parts can build on values generated by earlier ones.

- Python Support: LMQL queries use Python syntax and can be called from regular Python code, so you can combine prompting with Python's control flow and its extensive library ecosystem.

- Backend Portability: LMQL abstracts away the underlying model backend, so you can switch between backends (e.g., OpenAI, llama.cpp, Hugging Face Transformers) with minimal code changes.
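Types and constraints are easiest to understand as restrictions on decoding. The general technique behind constrained decoding, which LMQL builds on, is to mask disallowed tokens at each generation step. The sketch below is plain Python, not LMQL syntax and not LMQL's implementation: a hypothetical toy_model stands in for an LLM by returning scored candidate next tokens, and constrained_greedy drops every candidate that would violate a constraint before picking the highest-scoring one. An integer-typed output is modeled here by a hypothetical digits_only constraint, and max_chars is a hypothetical length limit.

```python
from typing import Callable, Dict, List

# A constraint decides whether a candidate token may be appended to the text so far.
Constraint = Callable[[str, str], bool]

# Hypothetical toy "model": maps the text generated so far to scored next tokens.
# A real LLM would score every token in its vocabulary.
TOY_SCORES: Dict[str, Dict[str, float]] = {
    "": {" forty": 0.5, "4": 0.3, "1": 0.2},
    "4": {" two": 0.6, "2": 0.4},
    "42": {".": 0.9, "0": 0.1},
}


def toy_model(prefix: str) -> Dict[str, float]:
    return TOY_SCORES.get(prefix, {})


def digits_only(text: str, token: str) -> bool:
    # Stand-in for an integer-typed variable: only digit tokens are allowed.
    return token.isdigit()


def max_chars(limit: int) -> Constraint:
    # Length limit: reject any token that would push the output past `limit` characters.
    return lambda text, token: len(text + token) <= limit


def constrained_greedy(
    model: Callable[[str], Dict[str, float]],
    constraints: List[Constraint],
    max_tokens: int = 10,
) -> str:
    text = ""
    for _ in range(max_tokens):
        # Mask out tokens that violate any constraint, then pick the best remaining one.
        allowed = {
            tok: score
            for tok, score in model(text).items()
            if all(check(text, tok) for check in constraints)
        }
        if not allowed:
            break  # no valid continuation left: generation stops here
        text += max(allowed, key=allowed.get)
    return text


print(repr(constrained_greedy(toy_model, [])))  # ' forty'
print(int(constrained_greedy(toy_model, [digits_only, max_chars(2)])))  # 42
```

Without constraints, the toy model's favorite continuation (the word " forty") wins. With them, the same model is forced to produce a string that int() can parse. Because invalid tokens are masked at every step, they are never emitted, which is the difference between enforcing a constraint and validating the finished text and retrying.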

Use Cases for LMQL

LMQL's versatility makes it suitable for a wide range of applications, including:

- Complex Data Extraction: Extract structured information from unstructured text, using types and constraints to keep the output in a predictable, machine-readable format.

- Chatbot Development: Build chatbots whose responses are more consistent in format and content.

- Automated Report Generation: Generate reports from LLM outputs with predefined formats and constraints.

- Data Analysis and Visualization: Process and analyze LLM-generated data using Python libraries.

- Multi-Step Prompting: Manage complex tasks that require multiple LLM interactions.
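The data extraction and multi-step use cases can be sketched in plain Python to show the structure LMQL formalizes: a reusable prompt template, a sub-prompt called once per field (similar in spirit to a nested query), and conversion of the result to the expected type. This is not LMQL syntax. The LLM type alias, the fake_llm stub, FIELD_PROMPT, and the extract_field and extract_record helpers are all hypothetical; in practice the callable would wrap a real model backend, and in this sketch swapping it is the only change needed to switch models.

```python
from string import Template
from typing import Callable, Dict, Union

# Hypothetical backend interface: a prompt goes in, a completion comes out.
LLM = Callable[[str], str]

# Reusable prompt template with placeholders for the input text and the field name.
FIELD_PROMPT = Template("Text: $text\nExtract the $field: ")


def extract_field(llm: LLM, text: str, field: str) -> str:
    # Sub-prompt written once and reused for every field.
    prompt = FIELD_PROMPT.substitute(text=text, field=field)
    return llm(prompt).strip()


def extract_record(llm: LLM, text: str) -> Dict[str, Union[str, int]]:
    # Multi-step task: one model call per field, results assembled into a record.
    name = extract_field(llm, text, "name")
    age = int(extract_field(llm, text, "age"))  # convert to the expected type
    return {"name": name, "age": age}


def fake_llm(prompt: str) -> str:
    # Deterministic stand-in for a real backend, keyed on the requested field.
    return " Ada Lovelace" if prompt.endswith("name: ") else " 36"


print(extract_record(fake_llm, "Ada Lovelace, 36"))  # {'name': 'Ada Lovelace', 'age': 36}
```

Note that int() here only checks the type after the fact and would raise on a non-numeric reply; an LMQL typed variable instead constrains generation so the value is produced in the right format in the first place.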

Comparison with Traditional Prompting

Traditional prompting often relies on trial and error: rewording a prompt, rerunning it, and parsing whatever text comes back. LMQL provides a more structured approach. Because types and constraints shape the output as it is generated, responses are more consistent in format, which reduces the need for iterative prompt refinement and post-processing.

Getting Started with LMQL

LMQL's syntax builds on Python, so it should feel familiar to Python developers, and it is distributed as a Python package that can be installed with pip (package name lmql). The project's website provides documentation, tutorials, and examples to help you get started.

Conclusion

LMQL brings the structure of a programming language to LLM interaction, offering a flexible way to build reliable LLM-powered applications. Its focus on modularity, typed outputs, and constraint enforcement sets it apart from traditional prompting methods and makes more advanced and efficient LLM applications practical to build.