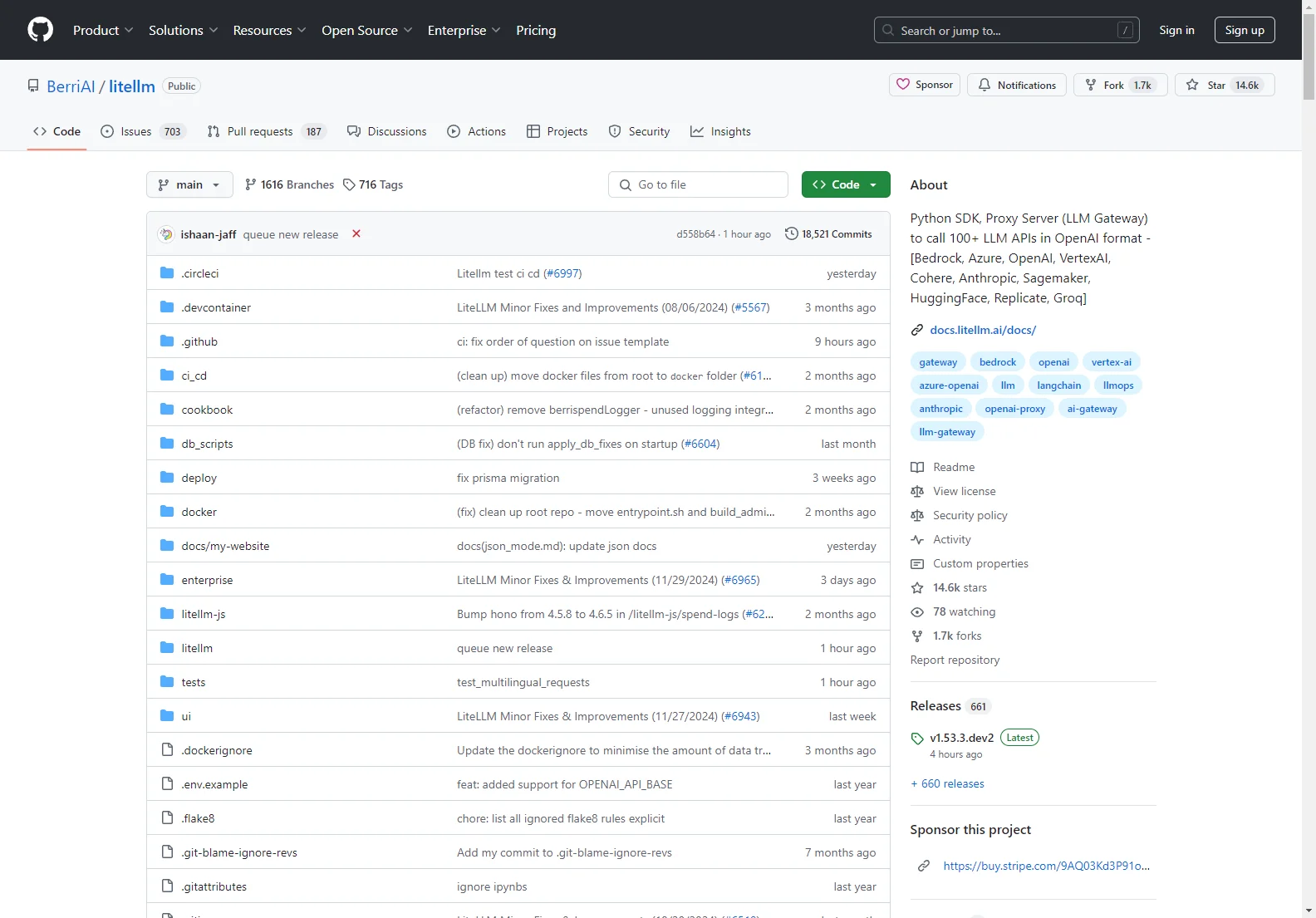

LiteLLM: A Unified Interface for 100+ Large Language Models

LiteLLM is a Python SDK and proxy server that simplifies access to over 100 Large Language Model (LLM) APIs. It provides a consistent OpenAI-compatible interface, so developers can switch between providers such as OpenAI, Azure, Bedrock, Vertex AI, and Cohere with little or no change to their code. This reduces the complexity of managing multiple LLM APIs and streamlines development.

Key Features

- Unified API: Interact with diverse LLMs using a single, familiar OpenAI-style API.

- Proxy Server: A hosted proxy simplifies deployment and management, offering features like authentication hooks, logging, cost tracking, and rate limiting.

- Multi-Provider Support: Seamlessly integrates with major LLM providers, including OpenAI, Azure, AWS Bedrock, Google Vertex AI, Cohere, Anthropic, and many others.

- Streaming Support: Streams LLM responses incrementally as they are generated, so users see output sooner.

- Asynchronous Operations: Supports asynchronous calls, so multiple requests can run concurrently without blocking.

- Retry and Fallback Logic: Ensures robustness by automatically retrying failed requests and falling back to alternative providers.

- Budget and Rate Limiting: Control costs and manage API usage effectively.

- Extensible Logging: Integrate with various logging tools like Lunary, Langfuse, and more for comprehensive observability.
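
To make the retry and fallback feature concrete, here is a minimal sketch of the general idea in plain Python. It is not LiteLLM's own implementation, which ships its own retry and fallback handling. The helper names `call_with_fallback` and `litellm_call` are hypothetical and exist only for illustration; the adapter assumes the `litellm.completion` call and the OpenAI-style response shape.

```python
import time
from typing import Callable, Sequence


def call_with_fallback(
    call: Callable[[str, list], str],
    models: Sequence[str],
    messages: list,
    retries_per_model: int = 2,
    backoff_seconds: float = 0.0,
) -> tuple:
    """Try each model in order, retrying each one before moving to the next.

    `call` is any function that takes (model, messages) and returns text.
    Returns a (model_that_answered, text) tuple.
    """
    last_error = None
    for model in models:
        for attempt in range(retries_per_model):
            try:
                return model, call(model, messages)
            except Exception as exc:  # a real client would catch narrower error types
                last_error = exc
                # Linear backoff: wait a little longer after each failed attempt.
                time.sleep(backoff_seconds * (attempt + 1))
    raise RuntimeError("all models failed") from last_error


def litellm_call(model: str, messages: list) -> str:
    """Hypothetical adapter around litellm.completion.

    The import is lazy so this sketch loads even when litellm is not installed.
    """
    from litellm import completion

    response = completion(model=model, messages=messages)
    return response.choices[0].message.content
```

Passing `litellm_call` as the `call` argument, with a list of model names ordered by preference, would route a request to the first model that answers. Because the transport is injected, the same logic can be exercised with a stub function in tests.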

Use Cases

LiteLLM is suitable for a wide range of applications, including:

- Building AI-powered applications: Quickly integrate LLMs into your projects without worrying about provider-specific APIs.

- Research and development: Experiment with different LLMs to find the best fit for your needs.

- Streamlining workflows: Simplify LLM integration into existing workflows and pipelines.

- Cost optimization: Manage and control LLM costs effectively.

Comparison with Other Tools

While other tools offer LLM access, LiteLLM distinguishes itself through its comprehensive support for numerous providers, its consistent API, and its built-in proxy server with advanced features like cost tracking and rate limiting. This makes it a more robust and versatile solution for developers working with multiple LLMs.

Getting Started

- Install LiteLLM: `pip install litellm`

- Set API keys for your preferred providers.

- Use the simple API to call any supported model.
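
The steps above can be sketched as follows. This is a minimal example under a few assumptions: API keys are supplied through environment variables such as `OPENAI_API_KEY`, the request goes through `litellm.completion`, and the response follows the OpenAI-style shape. The helpers `missing_keys`, `build_messages`, and `ask` are hypothetical names introduced for illustration, and any model name you pass is a placeholder for whichever model your account can access.

```python
import os


def missing_keys(names, env=None):
    """Return the environment variable names from `names` that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name)]


def build_messages(prompt: str, system: str = "") -> list:
    """Build an OpenAI-style chat message list, with an optional system message."""
    messages = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    return messages


def ask(model: str, prompt: str) -> str:
    """Send one prompt to `model` through LiteLLM and return the reply text."""
    # Lazy import: requires `pip install litellm`.
    from litellm import completion

    response = completion(model=model, messages=build_messages(prompt))
    return response.choices[0].message.content
```

With `OPENAI_API_KEY` set, a call such as `ask("gpt-4o-mini", "Say hello in one sentence.")` would return the model's reply. Switching providers should only require a different model string and the matching key, for example `ANTHROPIC_API_KEY` for an Anthropic model.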

Conclusion

LiteLLM simplifies the complexities of working with multiple LLMs, providing a unified and efficient solution for developers. Its comprehensive features and extensive provider support make it an invaluable tool for anyone building AI-powered applications.