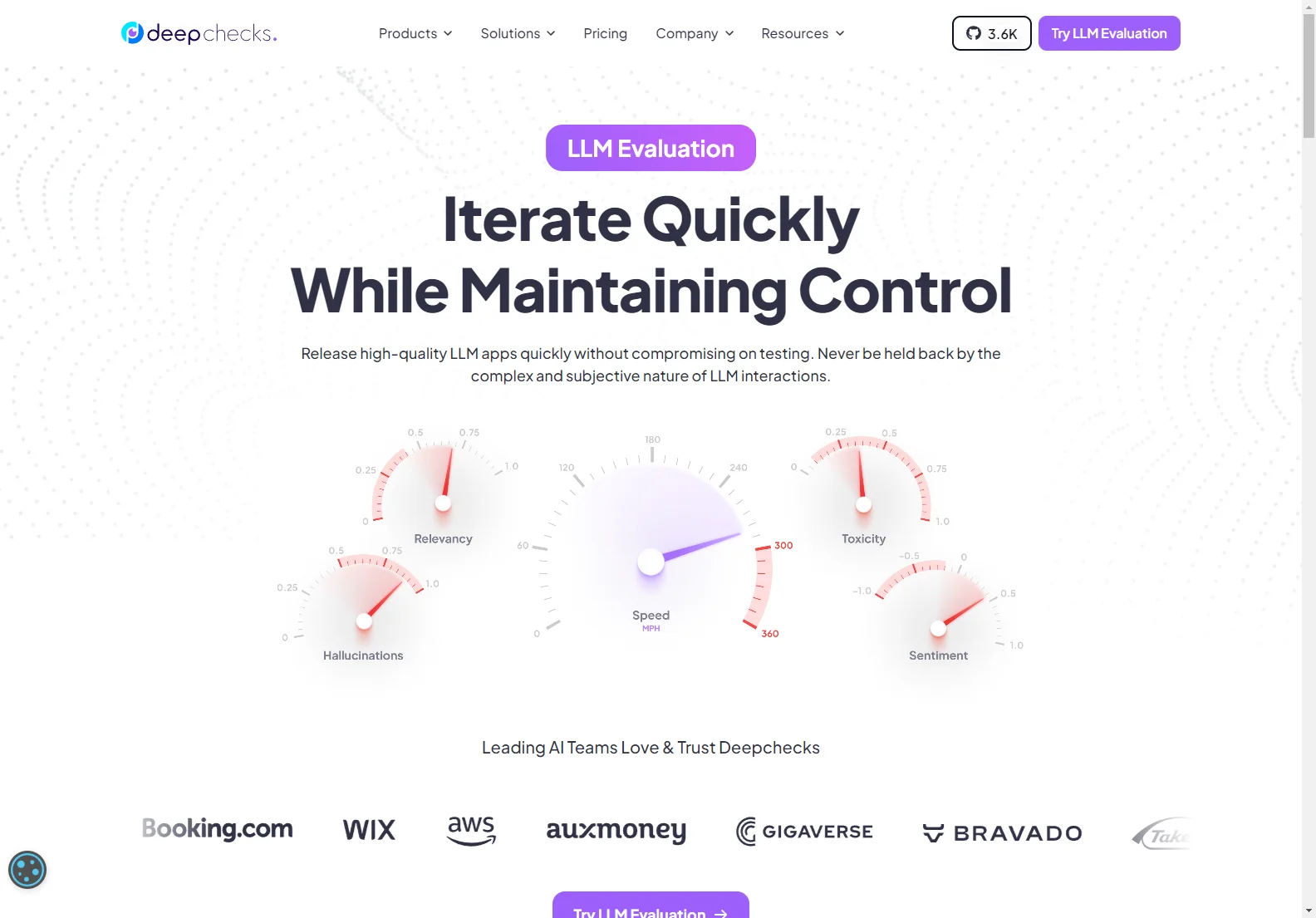

Deepchecks: Streamlining LLM App Evaluation

Deepchecks is a platform for evaluating Large Language Model (LLM) applications more efficiently and effectively. It addresses the difficulty of assessing generative AI outputs by offering a systematic approach to quality control and compliance.

The Challenge of LLM Evaluation

Evaluating LLM applications presents unique hurdles. Generated text is subjective to judge, which makes manual assessment time-consuming and prone to inconsistency. A seemingly minor change in wording can drastically alter the meaning or accuracy of a response. Furthermore, releasing an LLM app requires addressing numerous potential issues, including:

- Hallucinations: The generation of factually incorrect information.

- Bias: The perpetuation of unfair or discriminatory viewpoints.

- Policy deviations: Content that violates established guidelines.

- Harmful content: Outputs that are offensive, dangerous, or inappropriate.
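To illustrate why a minor change in wording is hard to catch, here is a minimal sketch that compares two answers using only surface similarity. It uses Python's standard `difflib` module and has no connection to the Deepchecks API. The example sentences and the `surface_similarity` helper are hypothetical and chosen for illustration only.

```python
from difflib import SequenceMatcher


def surface_similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1]; it says nothing about meaning."""
    return SequenceMatcher(None, a, b).ratio()


# Hypothetical reference answer and a candidate that differs by one word.
reference = "The medication is safe for children under 12."
candidate = "The medication is not safe for children under 12."

score = surface_similarity(reference, candidate)
print(f"surface similarity: {score:.2f}")
```

The two sentences score as a near match even though the second one reverses the claim, which is why text-overlap checks alone are not enough and why evaluation needs checks aimed at meaning, such as hallucination or policy checks.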

Deepchecks provides a solution to these challenges.

Deepchecks' Systematic Approach

Deepchecks automates the evaluation process, reducing the reliance on manual review. It builds on an open-source foundation used by thousands of companies, which supports its reliability and scalability. Key features include:

- Automated Evaluation: Quickly assess large volumes of LLM outputs.

- Golden Set Optimization: Efficiently manage and utilize golden sets (test sets for GenAI) to improve accuracy and reduce manual annotation time.

- Comprehensive Checks: Identify and mitigate various issues, from hallucinations to bias and policy violations.

- Continuous Monitoring: Track model performance over time to maintain quality and identify potential problems.
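The idea behind golden sets and automated evaluation can be sketched in a few lines of plain Python. This is not the Deepchecks API: `GoldenExample`, `evaluate`, `toy_app`, and the `THRESHOLD` value are all hypothetical names introduced for illustration, and the substring check stands in for the far richer checks a real evaluation platform would run.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GoldenExample:
    prompt: str
    expected_facts: list[str]  # phrases a correct answer must contain


def evaluate(app: Callable[[str], str], golden_set: list[GoldenExample]) -> float:
    """Return the fraction of golden examples whose output contains every expected fact."""
    if not golden_set:
        return 0.0
    passed = 0
    for example in golden_set:
        output = app(example.prompt).lower()
        if all(fact.lower() in output for fact in example.expected_facts):
            passed += 1
    return passed / len(golden_set)


def toy_app(prompt: str) -> str:
    """Stand-in for an LLM app; the second answer is deliberately wrong."""
    answers = {
        "What is the capital of France?": "The capital of France is Paris.",
        "Who wrote Hamlet?": "Hamlet was written by Christopher Marlowe.",
    }
    return answers.get(prompt, "I don't know.")


golden_set = [
    GoldenExample("What is the capital of France?", ["Paris"]),
    GoldenExample("Who wrote Hamlet?", ["Shakespeare"]),
]

pass_rate = evaluate(toy_app, golden_set)

# Hypothetical quality gate: flag a version that falls below the threshold.
THRESHOLD = 0.9
if pass_rate < THRESHOLD:
    print(f"pass rate {pass_rate:.0%} is below the {THRESHOLD:.0%} threshold")
```

Running the same golden set against every new version of an app, and recording the pass rate over time, is a simple form of the continuous monitoring described above.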

Benefits of Using Deepchecks

By using Deepchecks, developers can:

- Iterate Faster: Release high-quality LLM apps more quickly.

- Reduce Costs: Minimize the time and resources spent on manual evaluation.

- Improve Quality: Ensure that LLM apps meet high standards of accuracy, safety, and compliance.

- Maintain Control: Retain control over the evaluation process and easily address identified issues.

Deepchecks and the LLMOps Community

Deepchecks is a founding member of LLMOps.Space, a community dedicated to advancing the field of LLM operations. This involvement reflects Deepchecks' commitment to collaboration and innovation within the LLM ecosystem.

Conclusion

Deepchecks offers a comprehensive and efficient solution for evaluating LLM applications. Its systematic approach, automated processes, and open-source foundation make it a useful tool for developers who want to build and deploy high-quality, reliable LLM-powered products.