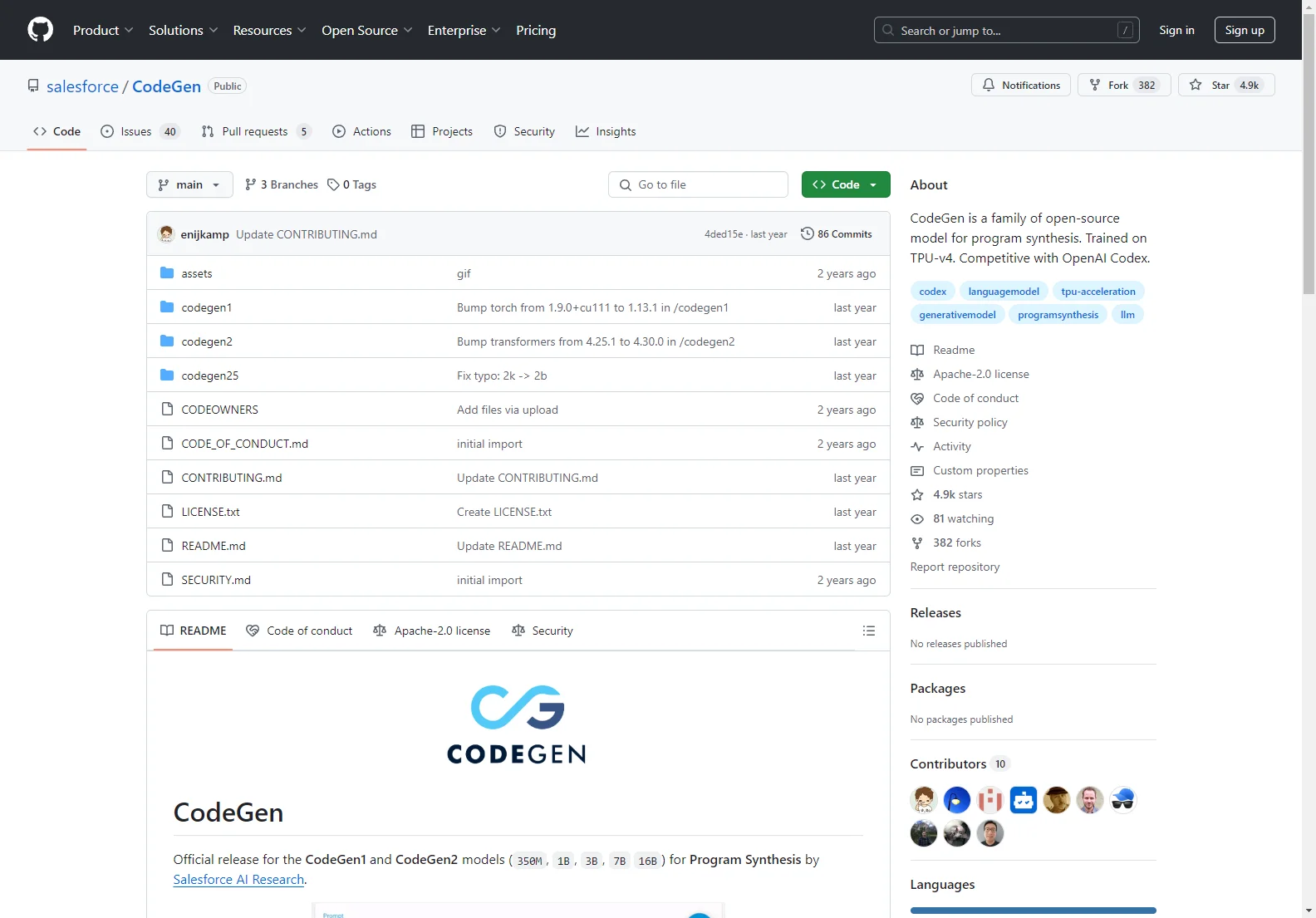

CodeGen: An Open-Source Model for Program Synthesis

CodeGen is a family of open-source models for program synthesis, developed by Salesforce AI Research. The models were trained on TPU-v4 and perform competitively with OpenAI Codex. This article gives an overview of CodeGen's capabilities, usage, and associated resources.

Key Features

- Open-Source: CodeGen's open-source nature fosters community contributions and allows for broader accessibility.

- Program Synthesis: CodeGen excels at generating code from natural language descriptions, streamlining the development process.

- Multiple Model Sizes: The CodeGen family includes models of varying sizes (350M, 1B, 3B, 7B, 16B parameters), allowing users to select the model best suited to their computational resources and task complexity.

- Multi-Turn Capabilities: CodeGen supports multi-turn interactions, enabling users to refine and iterate on code generation through dialogue.

- Strong Infill Sampling: CodeGen2.0 and later versions demonstrate improved infill sampling capabilities, enhancing code completion and editing.

- Competitive Performance: CodeGen performs competitively with OpenAI Codex, a leading commercial code generation model.
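
The multi-turn idea can be illustrated with a small prompt-building sketch. It assumes each turn's instruction is written as a comment and that earlier instructions and completions are simply concatenated into the next prompt. The helper name `build_context` and this exact layout are illustrative assumptions, not a format prescribed by CodeGen.

```python
from typing import List, Tuple


def build_context(turns: List[Tuple[str, str]], next_prompt: str) -> str:
    """Concatenate earlier (instruction, completion) pairs with the next instruction.

    Hypothetical helper: each instruction becomes a comment line, followed by
    the code generated for it, so the model sees the whole history.
    """
    parts = []
    for prompt, completion in turns:
        parts.append(f"# {prompt}\n{completion.rstrip()}\n")
    # The newest instruction goes last; the model continues from here.
    parts.append(f"# {next_prompt}\n")
    return "\n".join(parts)


history = [("read a list of numbers from stdin", "nums = [int(x) for x in input().split()]")]
print(build_context(history, "print the largest number"))
```

The returned string would then be tokenized and passed to `model.generate`, and the new completion appended to `history` before the next turn.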

Usage Examples

CodeGen models are available on the Hugging Face Hub. The examples below show how to load and sample from CodeGen1.0, CodeGen2.0, and CodeGen2.5:

CodeGen1.0:

    import torch
    from transformers import AutoTokenizer, AutoModelForCausalLM

    tokenizer = AutoTokenizer.from_pretrained("Salesforce/codegen-2B-mono")
    model = AutoModelForCausalLM.from_pretrained("Salesforce/codegen-2B-mono")
    inputs = tokenizer("# this function prints hello world", return_tensors="pt")
    sample = model.generate(**inputs, max_length=128)
    print(tokenizer.decode(sample[0], truncate_before_pattern=[r"\n\n^#", "^'''", "\n\n\n"]))
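
The `truncate_before_pattern` argument trims the decoded text at stop patterns. The sketch below shows the general idea with plain regular expressions: cut the completion at the earliest match of any pattern. It is a simplified stand-in with a hypothetical helper name (`truncate_before`), not the tokenizer's actual implementation, which may apply additional rules.

```python
import re
from typing import List


def truncate_before(completion: str, patterns: List[str]) -> str:
    """Cut `completion` at the earliest match of any regex in `patterns`."""
    starts = []
    for pattern in patterns:
        # MULTILINE lets ^ match at the start of every line, not just the string.
        match = re.search(pattern, completion, flags=re.MULTILINE)
        if match:
            starts.append(match.start())
    return completion[: min(starts)] if starts else completion


text = 'def hello():\n    print("hello world")\n\n\nhello()\n'
print(truncate_before(text, [r"\n\n^#", "^'''", "\n\n\n"]))
```

With these patterns, generation is cut where a new top-level comment, a triple-quoted string, or a run of blank lines begins, which tends to keep only the first generated function.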

CodeGen2.0:

    import torch
    from transformers import AutoTokenizer, AutoModelForCausalLM

    tokenizer = AutoTokenizer.from_pretrained("Salesforce/codegen2-7B")
    model = AutoModelForCausalLM.from_pretrained("Salesforce/codegen2-7B", trust_remote_code=True, revision="main")
    inputs = tokenizer("# this function prints hello world", return_tensors="pt")
    sample = model.generate(**inputs, max_length=128)
    print(tokenizer.decode(sample[0], truncate_before_pattern=[r"\n\n^#", "^'''", "\n\n\n"]))
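
For the infill sampling mentioned under Key Features, the prompt has to mark where the missing span goes. The sketch below assumes the sentinel tokens `<mask_1>`, `<sep>`, `<eom>`, and `<|endoftext|>` as described on the CodeGen2 model cards; those token names and both helper names are assumptions here and should be checked against the model card of the checkpoint in use.

```python
def format_infill_prompt(prefix: str, suffix: str) -> str:
    """Build an infill prompt: the model is asked to produce the text for <mask_1>.

    Assumed layout: prefix, mask sentinel, suffix, end of text, separator,
    then the mask sentinel again to start the infill.
    """
    return prefix + "<mask_1>" + suffix + "<|endoftext|>" + "<sep>" + "<mask_1>"


def truncate_at_eom(generated: str) -> str:
    """Keep only the infilled span, dropping everything from <eom> onward."""
    return generated.split("<eom>", 1)[0]


prefix = "def hello_world():\n    "
suffix = "    return name"
prompt = format_infill_prompt(prefix, suffix)
print(prompt)
```

The prompt would be tokenized and passed to `model.generate` as in the example above, then decoded with `skip_special_tokens=False` so the sentinels remain visible; the text after the prompt, cut with `truncate_at_eom`, is the proposed infill.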

CodeGen2.5:

    import torch
    from transformers import AutoTokenizer, AutoModelForCausalLM

    tokenizer = AutoTokenizer.from_pretrained("Salesforce/codegen25-7b-mono", trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained("Salesforce/codegen25-7b-mono")
    inputs = tokenizer("# this function prints hello world", return_tensors="pt")
    sample = model.generate(**inputs, max_length=128)
    print(tokenizer.decode(sample[0]))
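
When choosing among the model sizes listed under Key Features, a rough estimate of the memory needed just to hold the weights can help. The sketch below multiplies the parameter count by the bytes per parameter (2 for 16-bit, 4 for 32-bit). It ignores activations, the generation cache, and framework overhead, so real usage will be higher; the helper name and the size table are illustrative.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Parameter counts taken from the sizes listed under Key Features.
SIZES = {"350M": 350e6, "1B": 1e9, "3B": 3e9, "7B": 7e9, "16B": 16e9}

for name, n in SIZES.items():
    fp16 = weight_memory_gb(n, 2)
    fp32 = weight_memory_gb(n, 4)
    print(f"{name}: ~{fp16:.1f} GB in 16-bit, ~{fp32:.1f} GB in 32-bit")
```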

Training

The Jaxformer library is used for data preprocessing, training, and fine-tuning CodeGen models. It can be found on GitHub.

Citation

If you use CodeGen, please cite the following papers:

- Nijkamp, Erik, et al. "CodeGen: An Open Large Language Model for Code with Multi-Turn Program Synthesis." ICLR, 2023.

- Nijkamp, Erik, et al. "CodeGen2: Lessons for Training LLMs on Programming and Natural Languages." ICLR, 2023.

Conclusion

CodeGen offers a powerful and accessible open-source solution for program synthesis. Its competitive performance, multi-turn capabilities, and availability of various model sizes make it a valuable tool for developers and researchers alike.