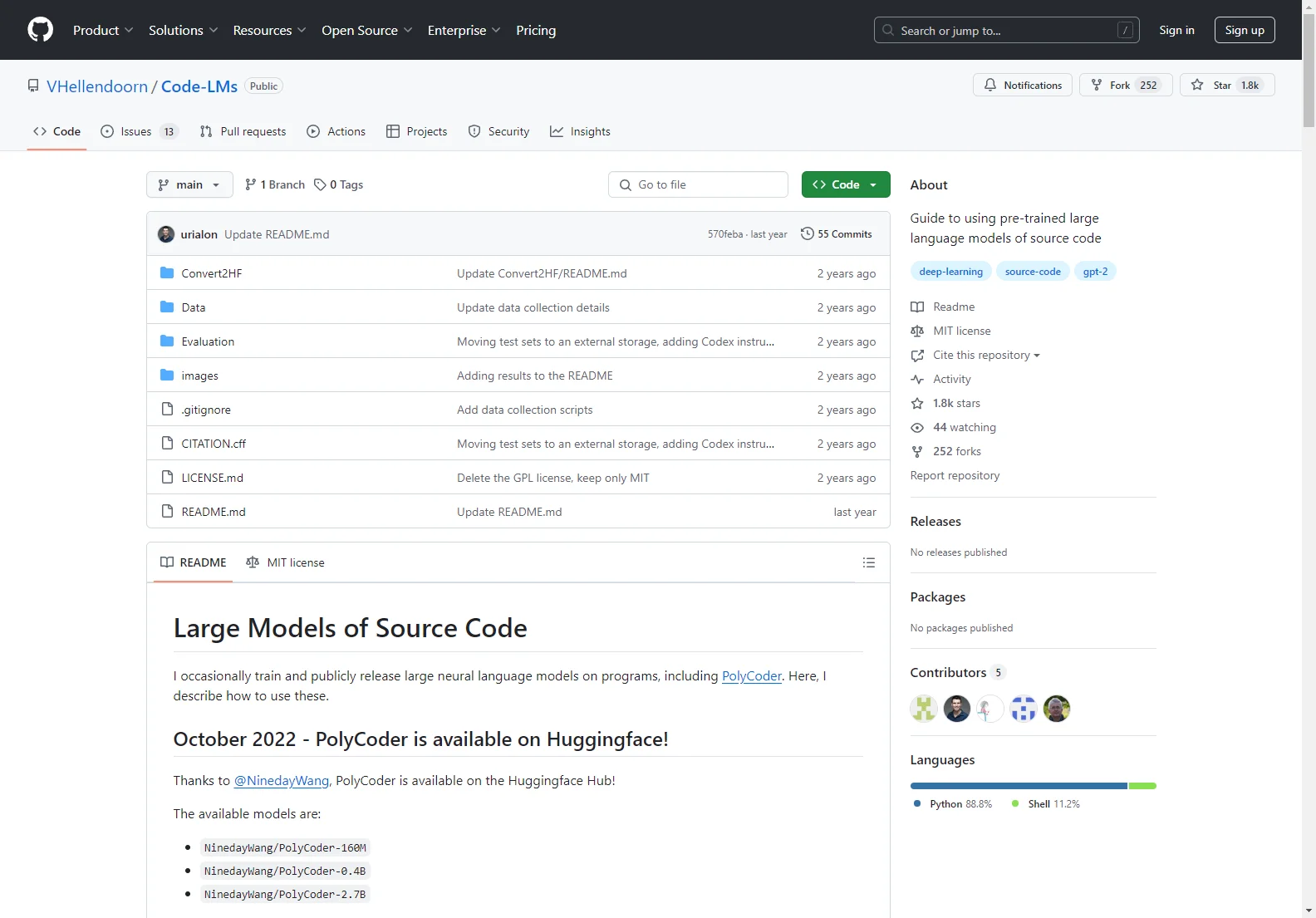

Guide to Using Pre-trained Large Language Models of Source Code

This guide gives an overview of using pre-trained large language models (LLMs) of source code for code generation and analysis. It focuses on the models and resources published by the Code-LMs project on GitHub.

Setup and Installation

The Code-LMs project is built on the GPT-NeoX toolkit. To get started, download a pre-trained checkpoint from the project's Zenodo repository. Checkpoints can be as large as 6GB and need roughly the same amount of GPU memory to run; running on CPU is not recommended.
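As a rough sanity check on the memory figure above, the following sketch estimates the memory needed just to hold a model's weights. It assumes 16-bit (2-byte) weights, which is an assumption and not something the project states, and it ignores activations and other runtime overhead. `estimate_weight_memory_gb` is a hypothetical helper, not part of the Code-LMs code.

```python
def estimate_weight_memory_gb(num_parameters, bytes_per_parameter=2):
    """Approximate memory (in decimal GB) needed to store the weights alone.

    Assumption: weights are stored in 16-bit precision (2 bytes each).
    Activations, caches, and framework overhead are NOT included.
    """
    if num_parameters < 0 or bytes_per_parameter <= 0:
        raise ValueError("num_parameters must be >= 0 and bytes_per_parameter > 0")
    return num_parameters * bytes_per_parameter / 1e9


# Parameter counts taken from the PolyCoder sizes listed in this guide.
MODEL_SIZES = {"160M": 160e6, "405M": 405e6, "2.7B": 2.7e9}

for name, params in MODEL_SIZES.items():
    print(f"{name}: ~{estimate_weight_memory_gb(params):.2f} GB for weights")
```

Under these assumptions the 2.7B model needs about 5.4 GB for its weights, which is consistent with the 6GB upper bound quoted above; actual usage will be somewhat higher.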

You can either build the project from source, using the project's fork of the GPT-NeoX repository, or use a pre-built Docker image for an easier setup.

From Source

The project's GitHub repository contains a fork of the GPT-NeoX repository with modifications to handle tabs and newlines in tokenization. Building from source allows for greater customization but requires familiarity with the GPT-NeoX toolkit.

Via Docker

A Docker image is available on DockerHub, simplifying the setup process. This image can be used with the downloaded checkpoint files.

Code Generation

Once the environment is set up, code generation is performed with the generate.py script. The script takes a prompt and generates a continuation using the loaded model. Sampling parameters such as temperature control how random the output is: lower values make generation more deterministic, and higher values make it more varied.
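To make the effect of temperature concrete, here is a minimal sketch of temperature-scaled sampling over a toy set of logits. It illustrates the general technique only and is not the project's generate.py implementation; the function names and the example logits are made up for illustration.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature, rng):
    """Draw one token index from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


toy_logits = [2.0, 1.0, 0.0]  # hypothetical scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(toy_logits, t)
    print(t, [round(p, 3) for p in probs])
```

Lower temperatures concentrate probability on the highest-scoring token, while higher temperatures flatten the distribution, so repeated sampling produces more varied completions.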

Models

Several pre-trained models are available, most notably PolyCoder, which was trained on a large multi-language corpus of code. The models can be downloaded from HuggingFace and Zenodo.

PolyCoder

PolyCoder is a multilingual model trained on code from many programming languages (see Datasets below). It comes in three sizes (160M, 405M, and 2.7B parameters); larger models generally perform better but need more memory and compute.
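Because the models are also published on HuggingFace, they can in principle be loaded with the `transformers` library instead of the GPT-NeoX toolkit. The sketch below shows one way this might look. The model identifiers in `ASSUMED_MODEL_IDS` are assumptions and should be checked against the project's README before use, `complete_code` is a hypothetical helper, and `transformers` and `torch` must be installed separately.

```python
# Assumed HuggingFace model identifiers; verify them against the project README.
ASSUMED_MODEL_IDS = {
    "160M": "NinedayWang/PolyCoder-160M",
    "0.4B": "NinedayWang/PolyCoder-0.4B",
    "2.7B": "NinedayWang/PolyCoder-2.7B",
}


def complete_code(prompt, model_id=ASSUMED_MODEL_IDS["160M"],
                  max_new_tokens=32, temperature=0.2):
    """Generate a continuation of `prompt` with a causal code model."""
    # Imported lazily so this module can be loaded without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    # Encode the prompt exactly as given; whitespace is significant.
    input_ids = tokenizer.encode(prompt, return_tensors="pt")
    output_ids = model.generate(
        input_ids,
        max_new_tokens=max_new_tokens,
        do_sample=True,            # sample instead of greedy decoding
        temperature=temperature,   # lower values give more deterministic output
    )
    return tokenizer.decode(output_ids[0])
```

A call such as `complete_code("def binary_search(arr, x):\n    ")` would download the selected model on first use and return the prompt followed by the generated continuation. Starting with the smallest model keeps the download and memory cost low while experimenting.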

Datasets

The models were trained on a 249GB multilingual corpus of code, collected from popular GitHub repositories. The dataset includes code from 12 programming languages and has been cleaned and deduplicated to improve training quality.
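The deduplication step can be pictured with a short sketch. It assumes exact-match deduplication by hashing each file's content, which is one common approach and not necessarily the exact procedure the project used; `deduplicate_files` and the sample file paths are hypothetical.

```python
import hashlib


def deduplicate_files(files):
    """Keep the first occurrence of each distinct file content.

    `files` is an iterable of (path, content) pairs. Two files count as
    duplicates only if their contents are byte-for-byte identical
    (an assumption; near-duplicate detection is out of scope here).
    """
    seen_digests = set()
    unique_files = []
    for path, content in files:
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        if digest in seen_digests:
            continue  # identical content already kept under another path
        seen_digests.add(digest)
        unique_files.append((path, content))
    return unique_files


corpus = [
    ("repo_a/util.py", "def add(a, b):\n    return a + b\n"),
    ("repo_b/helpers.py", "def add(a, b):\n    return a + b\n"),  # exact copy
    ("repo_b/main.py", "print('hello')\n"),
]
for path, _ in deduplicate_files(corpus):
    print(path)
```

Hashing keeps memory use proportional to the number of distinct files instead of their total size, which matters for a corpus of this scale.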

Evaluation

The project includes methods for evaluating the models with perplexity and with the HumanEval benchmark. Detailed instructions for replicating these evaluations are provided in the repository.
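For readers unfamiliar with these measures, the sketch below shows how they are typically computed. Perplexity is the exponential of the average negative log-likelihood per token. For HumanEval, the sketch uses the pass@k estimator commonly reported with that benchmark; whether the repository's scripts compute it in exactly this way is an assumption. Both function names are hypothetical.

```python
import math


def perplexity(token_log_probs):
    """Perplexity from per-token natural-log probabilities."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    average_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(average_nll)


def pass_at_k(num_samples, num_correct, k):
    """Estimate the chance that at least one of k samples passes the tests.

    num_samples: completions generated for a problem (n)
    num_correct: how many of them passed the unit tests (c)
    Computes 1 - C(n - c, k) / C(n, k).
    """
    if not 0 <= num_correct <= num_samples or not 1 <= k <= num_samples:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if num_samples - num_correct < k:
        return 1.0  # every size-k subset contains a correct sample
    return 1.0 - math.comb(num_samples - num_correct, k) / math.comb(num_samples, k)


# A model that assigns probability 0.25 to every token has perplexity of about 4.
print(perplexity([math.log(0.25)] * 8))
print(pass_at_k(num_samples=10, num_correct=3, k=1))
```

Lower perplexity means the model finds held-out code less surprising, while a higher pass@k means more benchmark problems are solved within k attempts.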

Caveats

The models have some limitations. They were not trained to solve programming problems directly, so they may perform worse on natural-language prompts than models whose training emphasized natural language. Whitespace matters: the prompt's indentation, tabs, and newlines should match the intended code exactly. The model may also start generating unrelated new files once it reaches what it predicts to be the end of the current one.
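Two of these caveats can be handled on the caller's side, as the following sketch suggests. It assumes that file boundaries appear in the generated text as the marker `<|endoftext|>`; that marker is an assumption based on GPT-style tokenizers and should be checked against the actual model output. `truncate_at_end_of_file` is a hypothetical helper.

```python
# Assumed end-of-file marker; confirm it against the model's real output.
END_OF_TEXT = "<|endoftext|>"

# Build prompts with explicit newlines and indentation, and avoid calling
# .strip() on them: leading and trailing whitespace is part of the input.
PROMPT = "def binary_search(arr, left, right, x):\n    mid = (left +"


def truncate_at_end_of_file(generated, marker=END_OF_TEXT):
    """Drop everything from the first end-of-file marker onward."""
    head, _, _ = generated.partition(marker)
    return head


raw_output = PROMPT + " right) // 2\n" + END_OF_TEXT + "import os\nprint(os.getcwd())\n"
print(truncate_at_end_of_file(raw_output))
```

Cutting at the first marker keeps only the continuation of the current file and discards any unrelated file the model began afterwards.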

Conclusion

The Code-LMs project is a useful resource for researchers and developers interested in LLMs for code generation and analysis. With its pre-trained models and its setup and evaluation instructions, it is a practical starting point for experimenting with code models.