Stability AI's Stable Beluga 2: A Comprehensive Guide

Stable Beluga 2 is a powerful large language model (LLM) developed by Stability AI, based on the Llama 2 70B architecture. This model excels at text generation tasks, offering a significant improvement over its predecessor, Stable Beluga 1. This guide will delve into its features, usage, limitations, and ethical considerations.

Key Features and Capabilities

- High-Quality Text Generation: Stable Beluga 2 generates coherent, contextually relevant, and grammatically correct text. It can handle various creative writing tasks, including poem generation, story writing, and script creation.

- Instruction Following: The model is adept at following instructions precisely, making it suitable for tasks requiring specific formatting or style.

- Llama 2 Foundation: Built upon the robust Llama 2 70B architecture, Stable Beluga 2 benefits from its inherent strengths in language understanding and generation.

- Orca-Style Dataset Fine-tuning: Fine-tuned on an Orca-style dataset, the model exhibits enhanced reasoning and instruction-following capabilities.

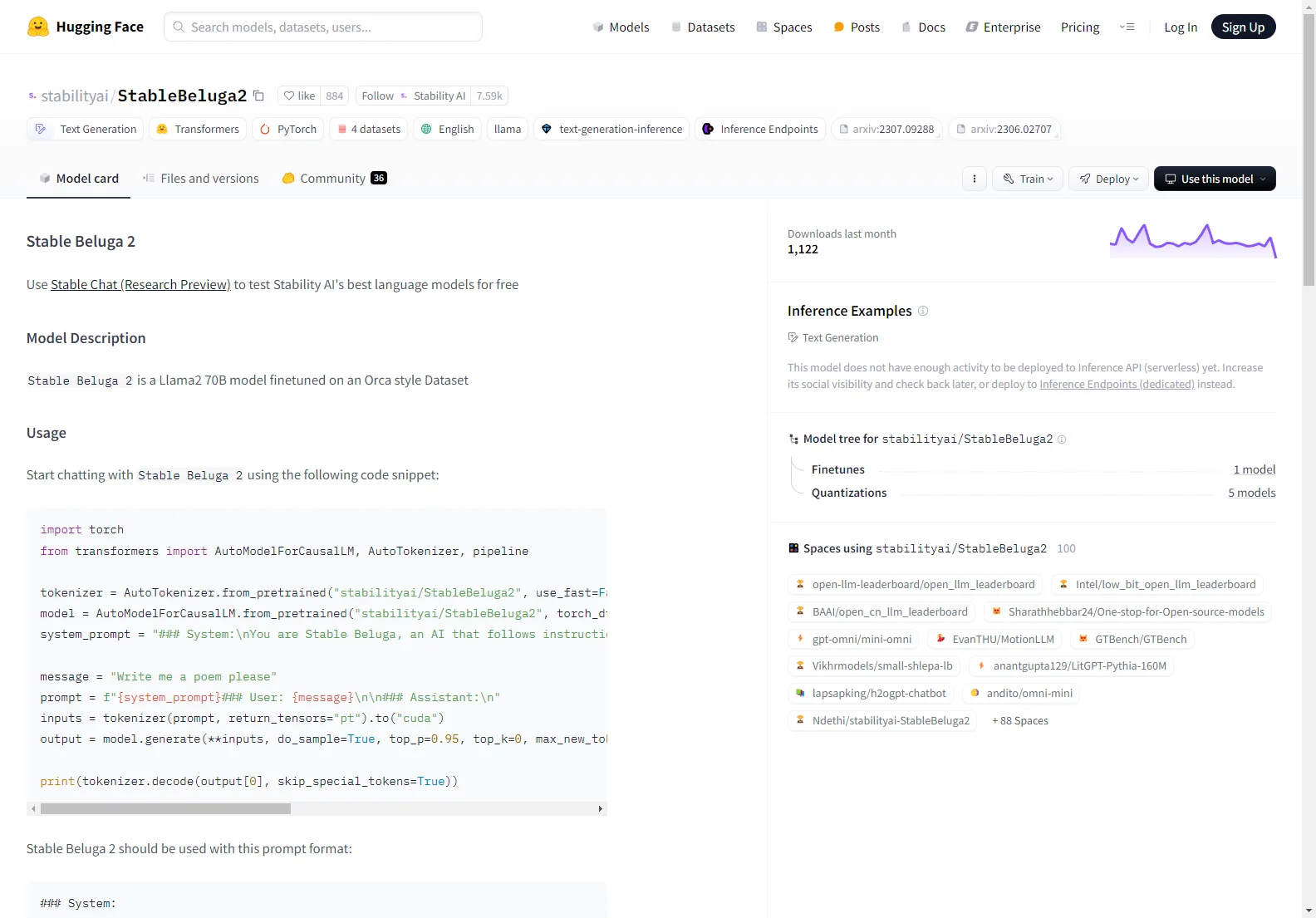

- Hugging Face Integration: Seamless integration with the Hugging Face ecosystem allows for easy access and deployment.

Usage and Implementation

Stable Beluga 2 is readily available through the Hugging Face platform. The provided code snippet demonstrates how to load, initialize, and use the model for text generation. Remember to adhere to the recommended prompt format for optimal performance. The model is best utilized with a structured prompt including system instructions, user input, and an assistant response section.

Model Details and Training

- Model Type: Auto-regressive language model

- Base Model: Llama 2 70B

- Training Dataset: Internal Orca-style dataset

- Training Procedure: Supervised fine-tuning using AdamW optimizer and mixed-precision training (BF16)

Ethical Considerations and Limitations

While Stable Beluga 2 offers significant advancements in LLM technology, it's crucial to acknowledge its limitations and potential risks. Like all LLMs, it may generate inaccurate, biased, or objectionable content. Thorough safety testing and application-specific tuning are essential before deployment. The model's training primarily focused on English, limiting its performance in other languages.

Comparisons with Other LLMs

Compared to other LLMs of similar size, Stable Beluga 2 demonstrates competitive performance in text generation tasks. Its fine-tuning on an Orca-style dataset gives it an edge in instruction following and complex reasoning. However, direct comparisons require benchmarking against specific tasks and metrics.

Conclusion

Stable Beluga 2 represents a significant step forward in LLM technology. Its ease of use, high-quality text generation, and strong instruction-following capabilities make it a valuable tool for various applications. However, responsible use and awareness of its limitations are paramount.