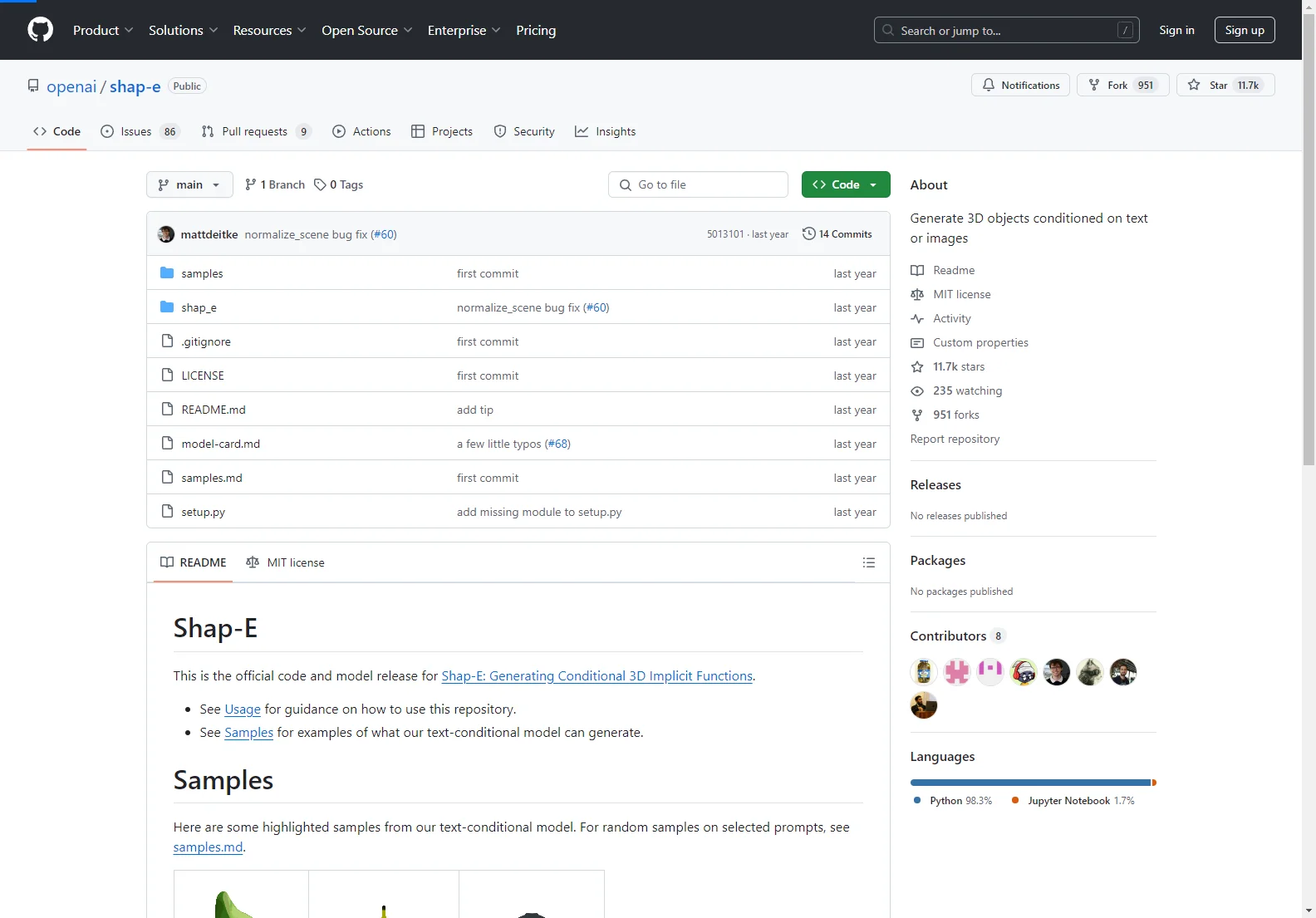

GitHub - openai/shap-e: Generate 3D Objects Conditioned on Text or Images

This document gives an overview of the openai/shap-e GitHub repository, the official code and model release for Shap-E, a system that generates 3D implicit functions conditioned on text or images. With it, users can turn a textual description or an input image into a 3D model.

Key Features

- Text-to-3D Generation: Shap-E creates 3D models from textual descriptions. Given a text prompt, the model generates a corresponding 3D object.

- Image-to-3D Generation: Given a synthetic view image of an object, ideally with the background removed, Shap-E generates a corresponding 3D model.

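- Implicit Function Representation: Rather than outputting a single explicit representation such as a mesh or point cloud, Shap-E generates the parameters of implicit functions. These can be rendered both as textured meshes and as neural radiance fields (NeRFs).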

- Open-Source Availability: The code and model are publicly available on GitHub, fostering collaboration and further development within the AI community.
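Shap-E directly generates the parameters of implicit functions. As a rough illustration of that idea, the sketch below turns a flat vector of numbers into a tiny ReLU multilayer perceptron f(x, y, z) that can be queried at any 3D point. The architecture, the hidden width `HIDDEN` and the names `make_implicit_fn` and `NUM_PARAMS` are hypothetical simplifications, not Shap-E's actual model or API. The real implicit functions are far larger, and their outputs are used for mesh and NeRF rendering.

```python
from typing import Callable, Sequence, Tuple

Point = Tuple[float, float, float]

# Hypothetical toy architecture (3 -> HIDDEN -> 1 ReLU MLP); not Shap-E's real model.
HIDDEN = 4
# Weights and biases of both layers: 3*HIDDEN + HIDDEN + HIDDEN + 1 = 21 numbers.
NUM_PARAMS = 3 * HIDDEN + HIDDEN + HIDDEN + 1


def make_implicit_fn(params: Sequence[float]) -> Callable[[Point], float]:
    """Unpack a flat parameter vector into an implicit function f(x, y, z) -> scalar."""
    if len(params) != NUM_PARAMS:
        raise ValueError(f"expected {NUM_PARAMS} params, got {len(params)}")
    it = iter(params)
    w1 = [[next(it) for _ in range(3)] for _ in range(HIDDEN)]  # HIDDEN x 3 weights
    b1 = [next(it) for _ in range(HIDDEN)]                      # hidden biases
    w2 = [next(it) for _ in range(HIDDEN)]                      # output weights
    b2 = next(it)                                               # output bias

    def f(p: Point) -> float:
        # Hidden layer: ReLU(w1 . p + b1), one value per hidden unit.
        hidden = [max(0.0, sum(w * x for w, x in zip(row, p)) + b) for row, b in zip(w1, b1)]
        # Output layer: a single scalar per query point.
        return sum(w * h for w, h in zip(w2, hidden)) + b2

    return f


if __name__ == "__main__":
    # Hand-picked parameters giving f(x, y, z) = 1 - |x|, since |x| = relu(x) + relu(-x).
    params = (
        [1.0, 0.0, 0.0, -1.0, 0.0, 0.0] + [0.0] * 6  # w1, row by row
        + [0.0] * HIDDEN                             # b1
        + [-1.0, -1.0, 0.0, 0.0]                     # w2
        + [1.0]                                      # b2
    )
    f = make_implicit_fn(params)
    print(f((0.5, 0.0, 0.0)))  # 0.5: inside the slab |x| < 1
    print(f((2.0, 0.0, 0.0)))  # -1.0: outside it
```

In a generative model of this style, the network would output `params` instead of using a hand-written vector. The resulting function can then be evaluated wherever a renderer needs it.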

Usage

The repository provides instructions and example notebooks that walk users through the process. Key steps include:

- Installation: Install the package and its dependencies by running `pip install -e .` from the repository root.

- Text-to-3D: Use the `sample_text_to_3d.ipynb` notebook to generate 3D models from text prompts.

- Image-to-3D: Use the `sample_image_to_3d.ipynb` notebook to generate 3D models from images. Removing the background from the input image is recommended for best results.

- Encoding and Rendering: The `encode_model.ipynb` notebook demonstrates how to load a 3D model, encode it into a latent, and render it. This requires Blender 3.3.1 or higher, with the environment variable `BLENDER_PATH` set to the path of the Blender executable.
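The encoding notebook depends on a local Blender install. Before running it, one way to sanity-check the setup is a small stdlib-only script like the one below. It checks that `BLENDER_PATH` names an executable file and that the version reported by `blender --version` is at least 3.3.1. The helper names are hypothetical and not part of shap-e. The parser assumes the version output contains a line such as `Blender 3.6.5`.

```python
import os
import re
import subprocess
from typing import Mapping, Optional, Tuple

# Minimum Blender version stated in the shap-e README.
MIN_BLENDER_VERSION = (3, 3, 1)


def parse_blender_version(version_output: str) -> Optional[Tuple[int, int, int]]:
    """Extract (major, minor, patch) from `blender --version` output.

    Assumption: the output contains a line like 'Blender 3.6.5'.
    A missing patch component is treated as 0.
    """
    match = re.search(r"Blender\s+(\d+)\.(\d+)(?:\.(\d+))?", version_output)
    if match is None:
        return None
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch or 0)


def version_ok(version: Optional[Tuple[int, int, int]]) -> bool:
    """True if a parsed version meets the minimum (tuples compare lexicographically)."""
    return version is not None and version >= MIN_BLENDER_VERSION


def blender_path_ok(env: Optional[Mapping[str, str]] = None) -> bool:
    """True if BLENDER_PATH is set and points to an existing executable file."""
    env = os.environ if env is None else env
    path = env.get("BLENDER_PATH")
    return bool(path) and os.path.isfile(path) and os.access(path, os.X_OK)


def check_blender() -> bool:
    """Hypothetical helper: run the configured Blender and check its version."""
    if not blender_path_ok():
        return False
    result = subprocess.run(
        [os.environ["BLENDER_PATH"], "--version"],
        capture_output=True, text=True, check=False,
    )
    return version_ok(parse_blender_version(result.stdout))


if __name__ == "__main__":
    print("Blender OK" if check_blender() else "Set BLENDER_PATH to a Blender >= 3.3.1 executable")
```

The parsing and comparison logic is kept separate from the subprocess call so it can be tested without Blender installed.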

Samples

The repository showcases highlighted samples from Shap-E's text-conditional model. Examples include a chair that looks like an avocado, an airplane that looks like a banana, and various other objects generated from short text prompts.

Comparisons

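Shap-E differs from many other 3D generation methods in two ways. First, it generates implicit functions rather than a single explicit representation such as a point cloud or mesh. Second, it supports both text and image conditioning. Because its output can be rendered as either a textured mesh or a neural radiance field, it is more flexible than methods tied to one explicit representation or one input modality. The accompanying paper compares Shap-E with Point-E, an explicit generative model over point clouds. It reports that Shap-E converges faster and reaches comparable or better sample quality.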
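One practical difference between implicit and explicit representations is that an implicit function can be queried at any resolution. An explicit grid or mesh, by contrast, is fixed when it is created. The sketch below uses a hand-written sphere signed distance function as a stand-in for a learned implicit function. This is an assumption for illustration, since Shap-E's implicit functions are neural networks. The sketch converts the sphere into explicit voxel grids of increasing size. As the grid is refined, the occupied fraction approaches the sphere-to-cube volume ratio π/6. The names `sphere_sdf` and `voxelize` are hypothetical.

```python
import math
from typing import Callable, Tuple

Point = Tuple[float, float, float]


def sphere_sdf(p: Point, radius: float = 1.0) -> float:
    """Stand-in implicit function: signed distance to a sphere centered at the origin.

    Negative inside, zero on the surface, positive outside.
    """
    x, y, z = p
    return math.sqrt(x * x + y * y + z * z) - radius


def voxelize(sdf: Callable[[Point], float], n: int, lo: float = -1.0, hi: float = 1.0) -> int:
    """Count occupied cells of an n x n x n grid over [lo, hi]^3.

    A cell counts as occupied when the implicit function is <= 0 at its center.
    """
    step = (hi - lo) / n
    centers = [lo + (i + 0.5) * step for i in range(n)]
    return sum(1 for x in centers for y in centers for z in centers if sdf((x, y, z)) <= 0.0)


if __name__ == "__main__":
    # The same implicit function, converted to explicit grids of increasing resolution.
    for n in (8, 16, 32):
        occupied = voxelize(sphere_sdf, n)
        print(f"n={n}: {occupied} occupied cells, fraction {occupied / n**3:.4f}")
    print(f"exact sphere/cube volume ratio: {math.pi / 6:.4f}")
```

Nothing about the underlying function changes between resolutions. Only the sampling of it does.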

Conclusion

Shap-E is a notable step forward in 3D generation, offering a versatile tool for creating 3D models from text or images. Because its code and model are open source, it also provides a foundation for further research on AI-driven 3D modeling.