What-If Tool: A Visual Guide to Understanding Machine Learning Models

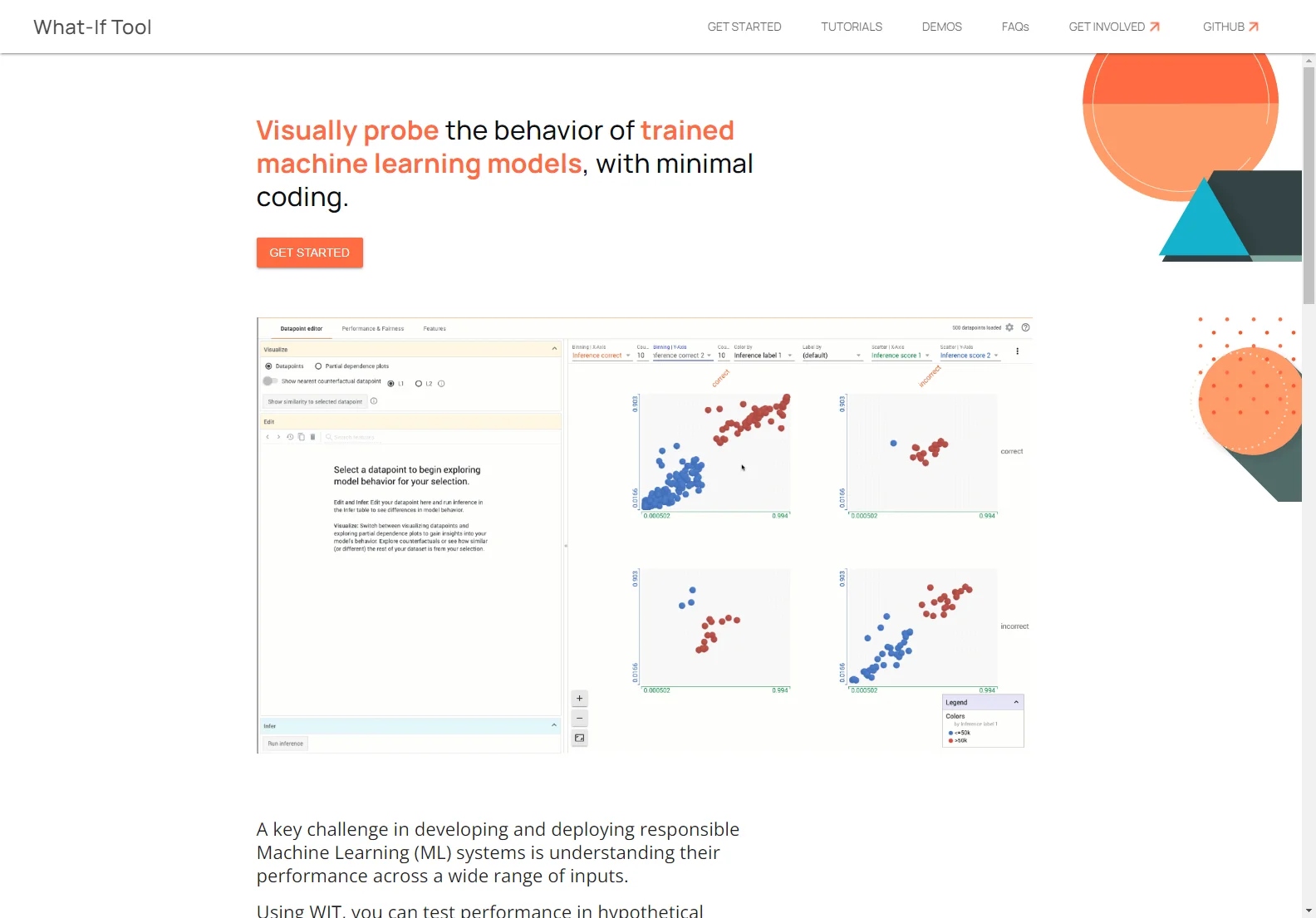

The What-If Tool (WIT) lets you visually explore the behavior of trained machine learning models with minimal coding. You can use it to examine model performance across a range of inputs, analyze the importance of different features, and compare model behavior across multiple models and subsets of data. These capabilities make it a practical aid in developing and deploying responsible ML systems.

Key Features and Capabilities

- Hypothetical Scenario Testing: Test model performance in hypothetical situations, for example by editing a datapoint's feature values and observing how the prediction changes.

- Feature Importance Analysis: Understand which data features have the most significant impact on model predictions.

- Visualization: Visualize model behavior across multiple models and across subsets of the input data.

- Fairness Metrics: Analyze model performance through the lens of different ML fairness metrics, helping identify and mitigate potential biases.

- Workflow Integration: Probe models from within your existing development environment, such as a notebook or TensorBoard.
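The first two capabilities can be illustrated outside the tool. WIT's datapoint editor lets you change a feature value and rerun inference. Its partial dependence plots show how a prediction moves as one feature is swept across a range. The sketch below reproduces both ideas in plain Python. It does not use WIT's API. `toy_model`, the feature names `age` and `hours_per_week`, and the helper functions are all hypothetical.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical datapoint format for this sketch: feature name -> numeric value.
Example = Dict[str, float]
PredictFn = Callable[[List[Example]], List[float]]


def toy_model(examples: List[Example]) -> List[float]:
    """Stand-in model (hypothetical): a linear score clipped to [0, 1]."""
    scores = []
    for ex in examples:
        raw = (ex["age"] - 20) / 50 + (ex["hours_per_week"] - 40) / 100
        scores.append(min(1.0, max(0.0, raw)))
    return scores


def with_feature(example: Example, feature: str, value: float) -> Example:
    """Copy a datapoint and overwrite one feature, leaving the original intact."""
    edited = dict(example)
    edited[feature] = value
    return edited


def what_if(predict: PredictFn, example: Example, feature: str,
            value: float) -> Tuple[float, float]:
    """Score a datapoint before and after a single hypothetical edit."""
    before, after = predict([example, with_feature(example, feature, value)])
    return before, after


def partial_dependence(predict: PredictFn, example: Example, feature: str,
                       values: Sequence[float]) -> List[Tuple[float, float]]:
    """Sweep one feature over `values` while holding the other features fixed."""
    variants = [with_feature(example, feature, v) for v in values]
    return list(zip(values, predict(variants)))


if __name__ == "__main__":
    person = {"age": 30, "hours_per_week": 40}
    print(what_if(toy_model, person, "age", 45))  # (0.2, 0.5)
    print(partial_dependence(toy_model, person, "age", [20, 45, 70, 80]))
```

A flat stretch in the partial dependence output means the feature has little local influence on the prediction. A steep change means the prediction is sensitive to that feature around the chosen datapoint.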

Supported Platforms and Integrations

WIT can be used in the following environments:

- Colaboratory notebooks

- Jupyter notebooks

- Cloud AI Platform Notebooks

- TensorBoard

- TFMA Fairness Indicators

It supports models built with TF Estimators, models served by TF Serving, Cloud AI Platform models, and any model that can be wrapped in a Python function.
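The sketch below shows what a model "wrapped in a Python function" can look like. As we understand WIT's custom-prediction contract, the function receives a batch of datapoints and returns one prediction per datapoint. For classification, each prediction is a list of class scores. For regression, it would be a single number. The sketch assumes datapoints arrive as lists of feature values in a fixed order. `ToyLogisticModel`, its weights, and `FEATURE_NAMES` are hypothetical stand-ins for a real model.

```python
import math
from typing import List, Sequence

# Hypothetical feature order; each datapoint is a list in this order.
FEATURE_NAMES = ["age", "hours_per_week"]


class ToyLogisticModel:
    """Hypothetical binary classifier with a scikit-learn-style predict_proba."""

    def __init__(self, weights: Sequence[float], bias: float):
        self.weights = list(weights)
        self.bias = bias

    def predict_proba(self, rows: List[List[float]]) -> List[List[float]]:
        probs = []
        for row in rows:
            z = self.bias + sum(w * x for w, x in zip(self.weights, row))
            p = 1.0 / (1.0 + math.exp(-z))
            probs.append([1.0 - p, p])  # [P(class 0), P(class 1)]
        return probs


model = ToyLogisticModel(weights=[0.05, 0.02], bias=-2.5)


def custom_predict(examples: List[List[float]]) -> List[List[float]]:
    """Batch of datapoints in, one list of class scores per datapoint out."""
    for row in examples:
        if len(row) != len(FEATURE_NAMES):
            raise ValueError(f"expected {len(FEATURE_NAMES)} features, got {len(row)}")
    return model.predict_proba(examples)


if __name__ == "__main__":
    print(custom_predict([[50, 0], [30, 40]]))
```

In a notebook, a function like this would typically be registered with `WitConfigBuilder(examples, FEATURE_NAMES).set_custom_predict_fn(custom_predict)` from the `witwidget` package and then displayed with `WitWidget`. Check the WIT documentation for the exact input format your version passes to the function.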

Data and Task Types

WIT handles diverse data and task types:

- Binary classification

- Multi-class classification

- Regression

- Tabular, image, and text data

Getting Started

WIT simplifies the process of asking and answering questions about models, features, and data points. Its intuitive interface allows for easy exploration and analysis, even for users with limited coding experience.
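One question of this kind is whether a model performs equally well on different subsets of the data. The following minimal sketch shows the slice-by-feature computation behind such a comparison. It assumes binary labels, scores in [0, 1], and a single decision threshold. The `country` feature and the data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence


def accuracy_by_slice(rows: List[Dict[str, Hashable]], labels: Sequence[int],
                      scores: Sequence[float], slice_feature: str,
                      threshold: float = 0.5) -> Dict[Hashable, float]:
    """Group datapoints by one feature's value and report accuracy per group."""
    correct: Dict[Hashable, int] = defaultdict(int)
    total: Dict[Hashable, int] = defaultdict(int)
    for row, label, score in zip(rows, labels, scores):
        key = row[slice_feature]
        predicted = 1 if score >= threshold else 0  # apply the decision threshold
        correct[key] += int(predicted == label)
        total[key] += 1
    return {key: correct[key] / total[key] for key in total}


if __name__ == "__main__":
    rows = [{"country": "X"}, {"country": "X"}, {"country": "Y"}, {"country": "Y"}]
    print(accuracy_by_slice(rows, [1, 0, 1, 0], [0.9, 0.6, 0.8, 0.1], "country"))
    # {'X': 0.5, 'Y': 1.0}
```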

Contributing to the What-If Tool

The What-If Tool is open-source and welcomes contributions from the community. If you're interested in helping develop and improve WIT, see the developer guide and contribute through the project's GitHub repository.

Updates and Research

Stay informed about the latest updates, new features, and improvements to the What-If Tool through the release notes. For a deeper understanding of the tool's architecture and design, refer to the systems paper presented at IEEE VAST ’19.

Fairness in AI

The What-If Tool allows exploration of various fairness metrics, providing a practical way to assess and address potential biases in machine learning models. Learn more about the different types of fairness and their implications.
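Two fairness criteria WIT can optimize for are demographic parity and equal opportunity. Demographic parity means similar positive-prediction rates across groups. Equal opportunity means similar true positive rates across groups. The sketch below computes both per group at a single threshold. It illustrates the metrics and is not WIT's implementation. The group labels and data are hypothetical.

```python
from typing import Dict, Hashable, Sequence


def fairness_report(groups: Sequence[Hashable], labels: Sequence[int],
                    scores: Sequence[float],
                    threshold: float = 0.5) -> Dict[Hashable, Dict[str, float]]:
    """Per-group positive rate and true positive rate at one threshold."""
    report = {}
    for group in sorted(set(groups)):
        members = [i for i, g in enumerate(groups) if g == group]
        predicted = {i: scores[i] >= threshold for i in members}
        # Demographic parity compares this rate across groups.
        positive_rate = sum(predicted[i] for i in members) / len(members)
        # Equal opportunity compares the true positive rate across groups.
        actual_pos = [i for i in members if labels[i] == 1]
        tpr = (sum(predicted[i] for i in actual_pos) / len(actual_pos)
               if actual_pos else float("nan"))
        report[group] = {"positive_rate": positive_rate, "tpr": tpr}
    return report


if __name__ == "__main__":
    print(fairness_report(["A", "A", "A", "B", "B", "B"],
                          [1, 1, 0, 1, 0, 0],
                          [0.9, 0.4, 0.7, 0.8, 0.3, 0.2]))
```

Raising or lowering the threshold for one group shifts these numbers. WIT's per-group threshold controls let you explore exactly this trade-off.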