Parti: Pathways Autoregressive Text-to-Image Model

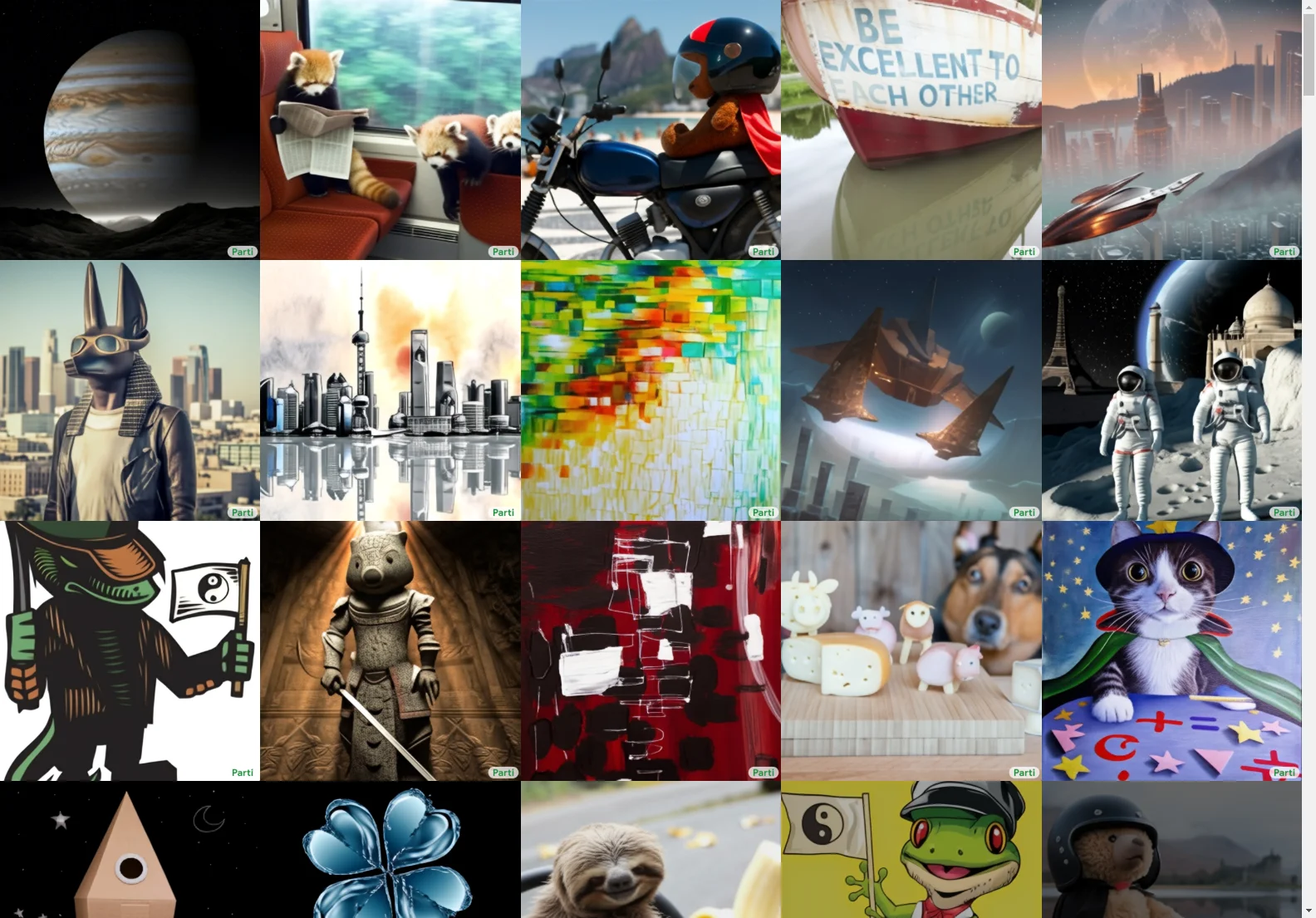

This research paper introduces Parti, a groundbreaking autoregressive text-to-image generation model. Unlike diffusion models, Parti tackles text-to-image generation as a sequence-to-sequence problem, similar to machine translation. This approach leverages advancements in large language models, particularly the benefits of scaling data and model size. The model utilizes the ViT-VQGAN image tokenizer, encoding images into discrete tokens and reconstructing them into high-quality, visually diverse images.

Key Findings

The research demonstrates consistent quality improvements by scaling Parti's encoder-decoder up to 20 billion parameters. Key results include:

- State-of-the-art zero-shot FID score of 7.23 and a finetuned FID score of 3.22 on MS-COCO.

- Effectiveness across diverse categories and difficulty levels, as shown in the Localized Narratives and PartiPrompts benchmark (a new benchmark of 1600+ English prompts released with this research).

Scaling from 350M to 20B parameters yielded substantial improvements in model capabilities and output image quality. Human evaluators consistently preferred the 20B parameter model, particularly for image realism/quality (63.2%) and image-text match (75.9%). The 20B model excels with abstract prompts, those requiring world knowledge, specific perspectives, or intricate writing and symbol rendering.

Composing Real-World Knowledge

Parti excels at generating complex scenes requiring:

- Accurate reflection of world knowledge

- Composition of numerous participants and objects with fine-grained details and interactions

- Adherence to specific image formats and styles

PartiPrompts Benchmark

PartiPrompts (P2) is a new benchmark dataset of over 1600 English prompts designed to evaluate model capabilities across various categories and challenge aspects. It includes both simple and complex prompts, enabling comprehensive model assessment.

Limitations and Future Work

While Parti demonstrates impressive capabilities, limitations exist. The paper discusses these challenges, including failure modes and opportunities for future improvements. Areas of focus include handling negation and absence, and addressing biases present in the training data.

Responsibility and Broader Impact

The research acknowledges potential risks associated with text-to-image models, including bias, safety, disinformation, and impact on creativity and art. The model's training data may contain biases, leading to stereotypical representations. The potential for creating deepfakes and propagating misinformation is also addressed. To mitigate these risks, the researchers have chosen not to publicly release the model, code, or data without further safeguards. A Parti watermark is used on all released images. Future work will focus on bias mitigation strategies and collaboration with artists to responsibly leverage the model's capabilities.

Data Card and Acknowledgements

The paper includes a detailed data card and acknowledges the contributions of numerous researchers and teams at Google Research.