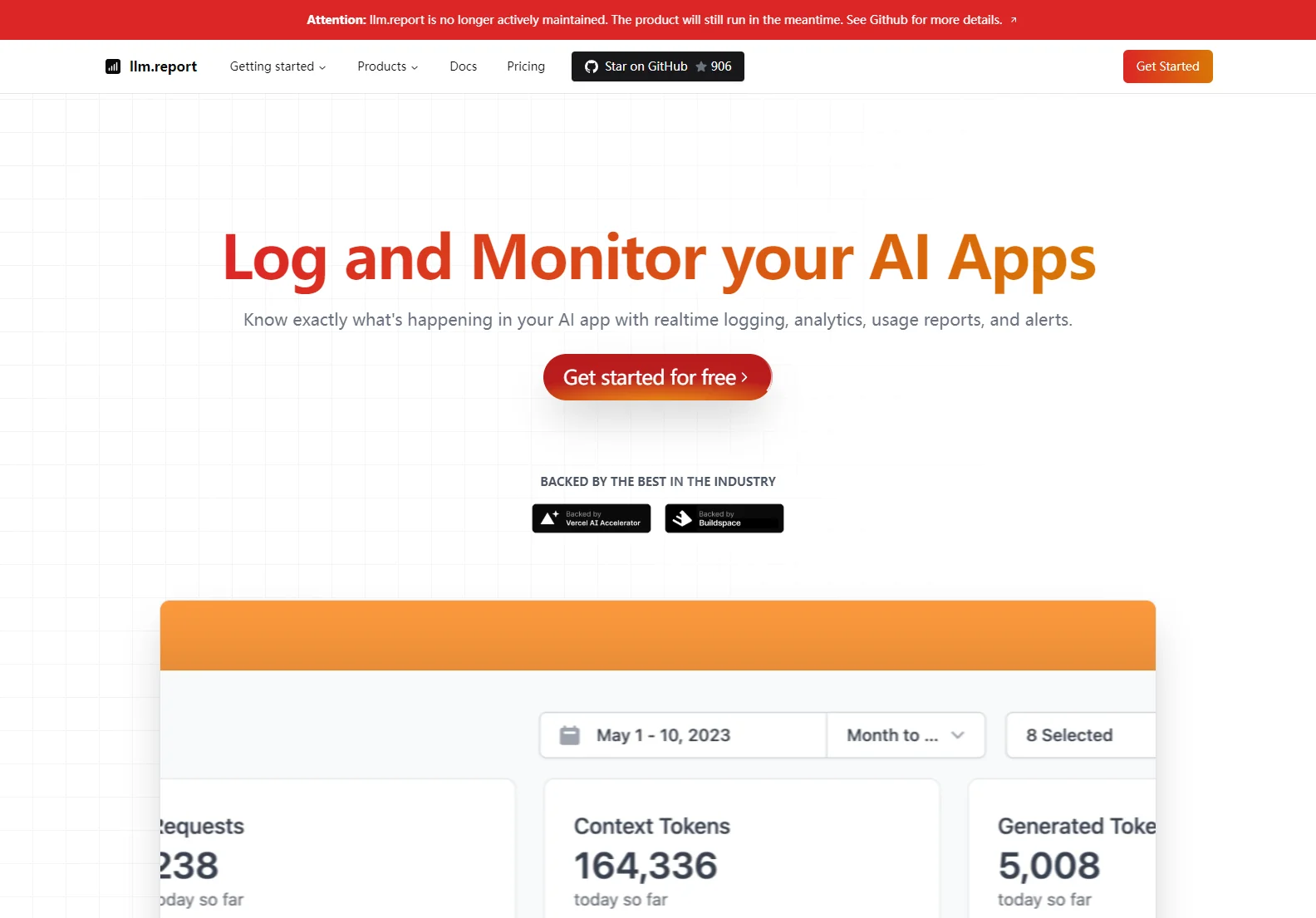

LLM Report: Open-Source Observability for Your OpenAI API

LLM Report is an open-source tool that provides real-time observability into your OpenAI API usage. It offers a simple, no-install dashboard that visualizes your API costs, prompts, and completions, so you can see where your spending goes and where it can be reduced.

Key Features

- Real-time Dashboard: Instantly view your OpenAI API usage data without installing anything.

- Cost Analysis: Analyze your cost per user and identify areas for potential savings.

- Prompt and Completion Logging: Log your prompts and completions to see how the model responds and where your prompts can be improved.

- Token Usage Optimization: Track token usage per request to find prompts that consume more tokens than they need. Because OpenAI bills by token, this directly reduces costs.

- Open-Source and Community-Driven: Contribute to the development and improvement of LLM Report through its active open-source community.
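How LLM Report computes these figures internally isn't described here. As a rough illustration of what a per-user cost breakdown involves, the Python sketch below aggregates logged requests by user. It assumes each log entry stores the end-user identifier passed in the OpenAI request's `user` field, plus the `prompt_tokens` and `completion_tokens` counts from the response's `usage` object. The `UsageRecord` schema, the `example-model` name, and its prices are hypothetical placeholders, not LLM Report's data model or OpenAI's actual rates.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens. Real OpenAI prices differ by
# model and change over time; replace these with current published rates.
PRICE_PER_1K = {
    "example-model": {"prompt": 0.0005, "completion": 0.0015},
}


@dataclass
class UsageRecord:
    """One logged request (hypothetical schema, not LLM Report's own)."""
    user: str               # value sent in the request's `user` field
    model: str
    prompt_tokens: int      # from the response's usage.prompt_tokens
    completion_tokens: int  # from the response's usage.completion_tokens


def request_cost(rec: UsageRecord) -> float:
    """Price prompt and completion tokens separately, per 1,000 tokens."""
    price = PRICE_PER_1K[rec.model]
    return (rec.prompt_tokens / 1000) * price["prompt"] + (
        rec.completion_tokens / 1000
    ) * price["completion"]


def cost_per_user(records: list[UsageRecord]) -> dict[str, dict[str, float]]:
    """Aggregate total tokens and total cost for each user."""
    totals = defaultdict(lambda: {"tokens": 0, "cost": 0.0})
    for rec in records:
        totals[rec.user]["tokens"] += rec.prompt_tokens + rec.completion_tokens
        totals[rec.user]["cost"] += request_cost(rec)
    return dict(totals)


records = [
    UsageRecord("alice", "example-model", prompt_tokens=1200, completion_tokens=300),
    UsageRecord("bob", "example-model", prompt_tokens=400, completion_tokens=100),
    UsageRecord("alice", "example-model", prompt_tokens=800, completion_tokens=200),
]

# Print users from most to least expensive.
report = cost_per_user(records)
for user, t in sorted(report.items(), key=lambda kv: kv[1]["cost"], reverse=True):
    print(f"{user}: {t['tokens']} tokens, ${t['cost']:.5f}")
```

Prompt and completion tokens are priced separately in this sketch because OpenAI typically charges different rates for input and output tokens, so two users with the same total token count can have noticeably different costs.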

Benefits

- Cost Savings: Identify and reduce unnecessary spending on your OpenAI API usage.

- Improved Efficiency: Refine your prompts based on logged completions to get better results from the model.

- Enhanced Understanding: Gain a deeper understanding of your LLM usage patterns.

- Community Support: Benefit from the support and collaboration of a vibrant open-source community.

Getting Started

Simply enter your OpenAI API key, and LLM Report will automatically fetch and display your data. No installations or complex configurations are required.

Comparisons

While several other tools offer OpenAI API analytics, LLM Report distinguishes itself through its open-source code, ease of use, and real-time dashboard. Unlike proprietary solutions, its source is open to inspection and accepts community contributions, so improvements and new features can come directly from its users.

Conclusion

LLM Report is a valuable and accessible option for anyone using the OpenAI API. Its open-source code, user-friendly interface, and cost analytics make it a useful tool for optimizing costs, improving efficiency, and understanding how you use LLMs.