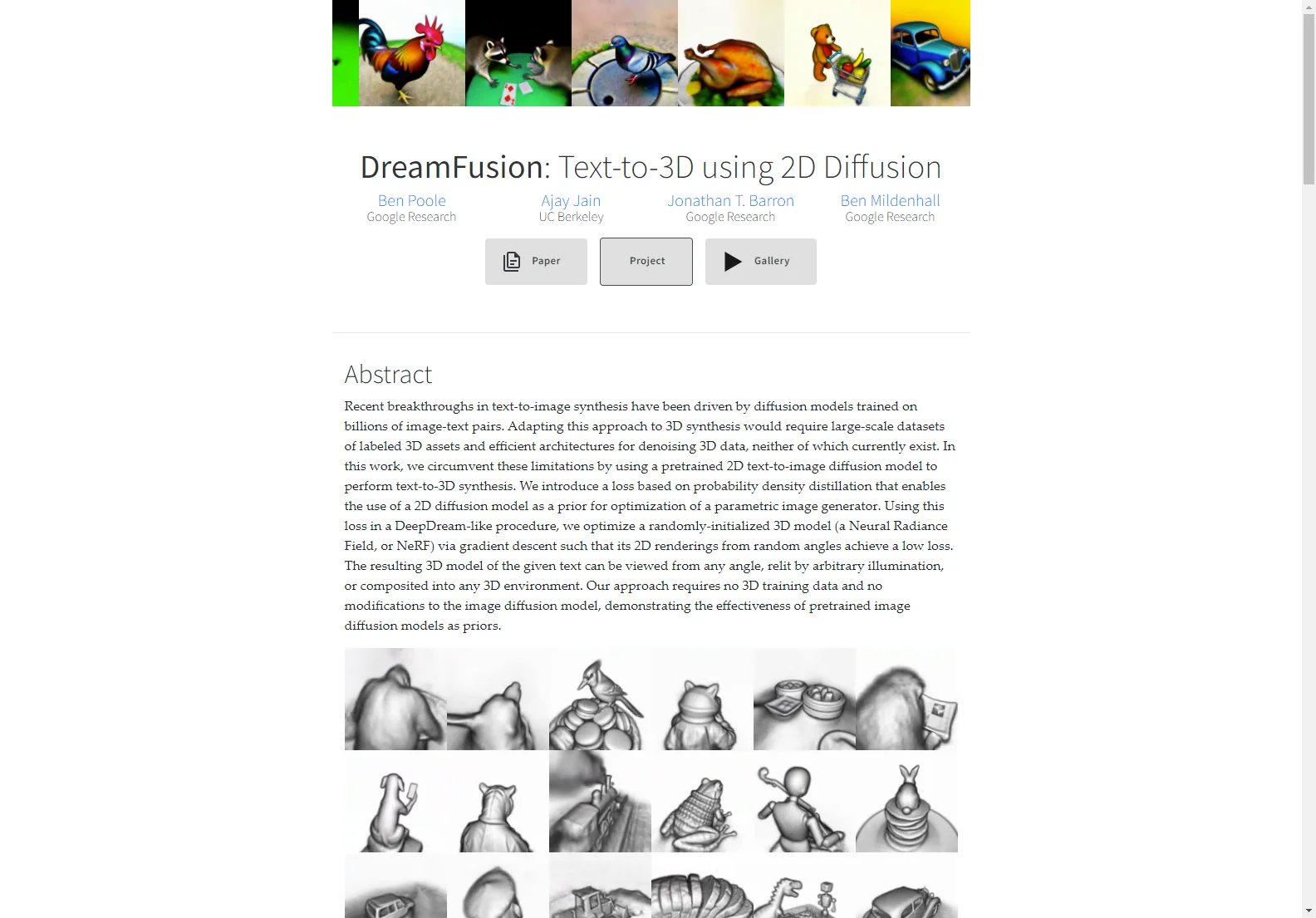

DreamFusion: Text-to-3D Using 2D Diffusion

DreamFusion is a text-to-3D synthesis method that uses a pretrained 2D text-to-image diffusion model as a prior. Instead of training on a large 3D dataset, it optimizes a 3D model so that its 2D renderings look plausible to the diffusion model for a given prompt, so no 3D training data is needed. The result is a high-fidelity 3D object or scene generated directly from a text description.

Key Features and Capabilities

- Text-to-3D Generation: Transform text prompts into realistic 3D models with accurate depth, normals, and appearance.

- High-Fidelity Results: Generate detailed and visually appealing 3D objects with fine-grained textures and realistic lighting.

- Relightable Objects: The generated 3D models can be relit under various lighting conditions, offering flexibility in post-processing and rendering.

- Neural Radiance Field (NeRF) Representation: DreamFusion utilizes NeRFs to represent the 3D models, enabling efficient rendering from arbitrary viewpoints.

- No 3D Training Data Required: The method needs no large-scale 3D dataset, because all supervision comes from the pretrained 2D diffusion model.

- Mesh Export Capability: Generated NeRF models can be exported as meshes using the marching cubes algorithm, for integration into 3D renderers and modeling software.

How DreamFusion Works

DreamFusion introduces Score Distillation Sampling (SDS), a loss based on probability density distillation. SDS makes it possible to optimize samples in an arbitrary parameter space (here, the parameters of a 3D model) as long as those parameters can be mapped differentiably to images, using a 2D diffusion model as the guide. A randomly initialized 3D model (a NeRF) is rendered from random camera angles. Each rendering is perturbed with noise, and the pretrained text-to-image diffusion model predicts that noise conditioned on the text prompt. The difference between the predicted and the injected noise gives a gradient that is backpropagated through the renderer to update the NeRF, so that its renderings become more likely under the diffusion model.
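The SDS update can be illustrated with a deliberately tiny sketch. It is not DreamFusion's implementation: the "rendering" here is the parameter array itself (so the renderer Jacobian is the identity), and `toy_noise_predictor` is a hypothetical stand-in for the pretrained diffusion model, written in closed form for a data distribution concentrated on one `target` image. The noise schedule, the sampling range for `t`, and the weighting `w_t` are likewise assumptions chosen for illustration.

```python
import numpy as np

def toy_noise_predictor(z_t, alpha_t, sigma_t, target):
    """Hypothetical stand-in for a pretrained diffusion model.

    If all data equals `target`, the ideal noise prediction for
    z_t = alpha_t * x + sigma_t * eps has this closed form.
    """
    return (z_t - alpha_t * target) / sigma_t

def sds_gradient(x, target, rng):
    """One SDS-style gradient estimate with respect to the image x."""
    t = rng.uniform(0.02, 0.98)                  # random noise level (assumed range)
    alpha_t, sigma_t = np.sqrt(1.0 - t), np.sqrt(t)  # assumed variance-preserving schedule
    eps = rng.standard_normal(x.shape)           # injected noise
    z_t = alpha_t * x + sigma_t * eps            # noised rendering
    eps_hat = toy_noise_predictor(z_t, alpha_t, sigma_t, target)
    w_t = sigma_t ** 2                           # weighting (an assumption)
    # Predicted minus injected noise; the model's own Jacobian is not used.
    return w_t * (eps_hat - eps)

def optimize(target, steps=500, lr=0.1, seed=0):
    """Gradient descent on a randomly initialized image using SDS gradients."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(target.shape)        # random initialization
    for _ in range(steps):
        x = x - lr * sds_gradient(x, target, rng)
    return x

if __name__ == "__main__":
    target = np.full((4, 4), 0.5)
    result = optimize(target)
    print(np.abs(result - target).max())
```

In this toy setting the gradient reduces to a positive multiple of `x - target`, so the image is pulled toward the only sample the stand-in model considers likely. With a real NeRF, the same gradient would instead be pushed back through a differentiable renderer to the NeRF parameters.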

Additional regularizers and optimization strategies are incorporated to enhance the geometry and overall quality of the generated 3D models. The resulting NeRFs are coherent and exhibit high-quality normals, surface geometry, and depth, allowing for realistic relighting using a Lambertian shading model.
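Lambertian (diffuse) relighting of a single surface point can be sketched as follows. This is a minimal illustration that assumes one point light plus an ambient term; the function and parameter names are hypothetical and not taken from DreamFusion's code.

```python
import numpy as np

def lambertian_shade(albedo, normal, point, light_pos, light_color, ambient):
    """Diffuse shading of one surface point under a point light plus ambient light.

    albedo, light_color, ambient: RGB arrays of shape (3,)
    normal, point, light_pos: 3D vectors of shape (3,)
    """
    n = normal / np.linalg.norm(normal)            # unit surface normal
    to_light = light_pos - point
    to_light = to_light / np.linalg.norm(to_light) # unit direction to the light
    # Surfaces facing away from the light receive no diffuse contribution.
    diffuse = max(0.0, float(np.dot(n, to_light)))
    return albedo * (light_color * diffuse + ambient)

if __name__ == "__main__":
    albedo = np.array([0.8, 0.5, 0.2])
    color = lambertian_shade(
        albedo,
        normal=np.array([0.0, 0.0, 1.0]),
        point=np.zeros(3),
        light_pos=np.array([0.0, 0.0, 2.0]),
        light_color=np.full(3, 0.9),
        ambient=np.full(3, 0.1),
    )
    print(color)
```

Because the shaded color depends only on albedo, normal, and light placement, moving `light_pos` relights the same geometry without changing the underlying model, which is why good surface normals matter for relighting.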

Comparisons with Other AI Products

DreamFusion differs from other text-to-3D methods in relying on a pretrained 2D diffusion model instead of 3D supervision. This removes the need for large, carefully curated 3D datasets, which are far scarcer than image-text data. The trade-off is that each prompt requires its own optimization run, since the 3D model is fitted per prompt.

Applications

DreamFusion has a wide range of potential applications, including:

- Game Development: Creating realistic 3D assets for video games.

- Film and Animation: Generating high-quality 3D models for visual effects and animation.

- Architectural Visualization: Creating realistic 3D models of buildings and environments.

- Product Design: Rapid prototyping and visualization of new product designs.

Conclusion

DreamFusion shows that a pretrained 2D diffusion model can serve as an effective prior for text-to-3D synthesis. By removing the dependence on 3D training data, it makes it possible to generate high-fidelity 3D content directly from text descriptions for the creative and technical applications listed above.